ComfyUI: Inpainting

Description

Inpainting is the task of reconstructing missing regions of an image, that is, redrawing or filling in details in areas that are missing or damaged. It is an important problem in computer vision and a basic feature of many image and graphics applications, such as object removal, image repair, image manipulation, object relocation, image synthesis, and image-based rendering. In practice it is commonly used to repair damaged photos and remove unwanted objects. Outpainting, which extends an image beyond its original borders, works on the same principle as inpainting.

Inpainting Methods in ComfyUI

ComfyUI offers several ways to inpaint:

- Using VAE Encode For Inpainting + Inpaint model: redraws the masked area from scratch and requires a high denoise value.

- Using VAE Encode + SetNoiseMask + Standard Model: encodes the original image and resamples only the masked area, so a low denoise value can be used.

- Using Inpaint Model Conditioning + Standard Model / Inpainting Model: with a standard model it behaves like the second method; with an inpainting model it also works at a low denoise value.

- Using ControlNet Inpainting + Standard Model: requires a high denoise value, but the ControlNet strength can be adjusted to control how much detail is added.

Preparation

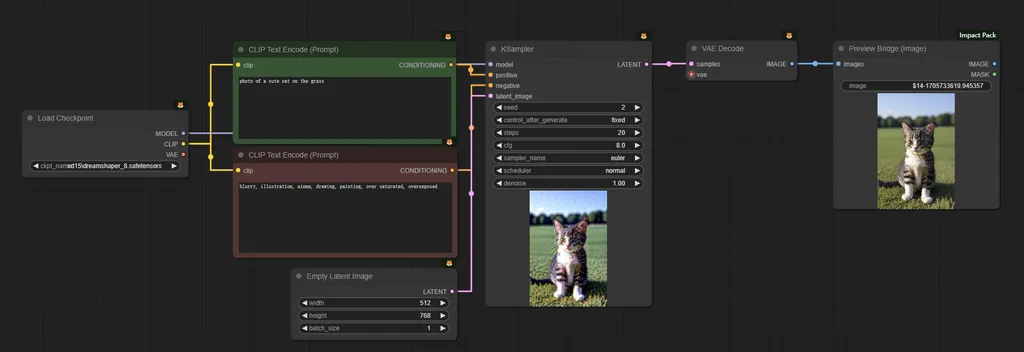

The following example turns a generated cat into a tiger.

First, a basic text-to-image workflow generates a picture of a cute cat.

Positive prompt:

photo of a cute cat on the grass

Negative prompt:

blurry, illustration, anime, drawing, painting, over saturated, overexposed

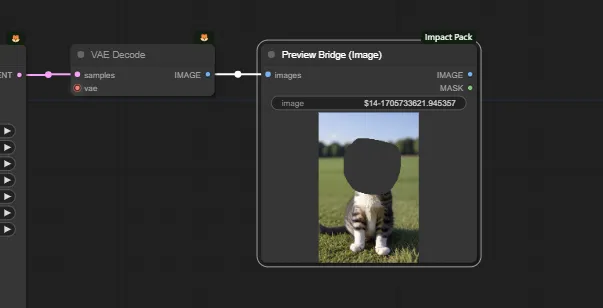

To turn the cat into a tiger with each of the methods above, first create a mask that marks the area to be redrawn.

Right-click the image, choose Open in MaskEditor from the context menu, paint over the area to be redrawn, and click Save to node. All of the methods below use the same generated cat image and painted mask.
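In core ComfyUI, the MaskEditor saves the painted area into the image's alpha channel as transparent pixels, and the Load Image node turns that channel into a MASK by computing 1 minus the alpha. The minimal pure-Python sketch below shows only that conversion, assuming 8-bit alpha values stored as nested lists; `alpha_to_mask` is a hypothetical helper, not a ComfyUI function.

```python
def alpha_to_mask(alpha_rows):
    """Convert 8-bit alpha values (0-255) to a ComfyUI-style mask (0.0-1.0).

    Hypothetical helper mirroring the idea of Load Image's mask output:
    painted pixels are saved as transparent (alpha 0), so
    mask = 1 - alpha / 255 marks them with 1.0 (redraw) and leaves 0.0 elsewhere.
    """
    return [[1.0 - a / 255.0 for a in row] for row in alpha_rows]


if __name__ == "__main__":
    # 2x3 alpha channel: the middle column was painted in MaskEditor (alpha 0).
    alpha = [[255, 0, 255],
             [255, 0, 255]]
    print(alpha_to_mask(alpha))  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```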

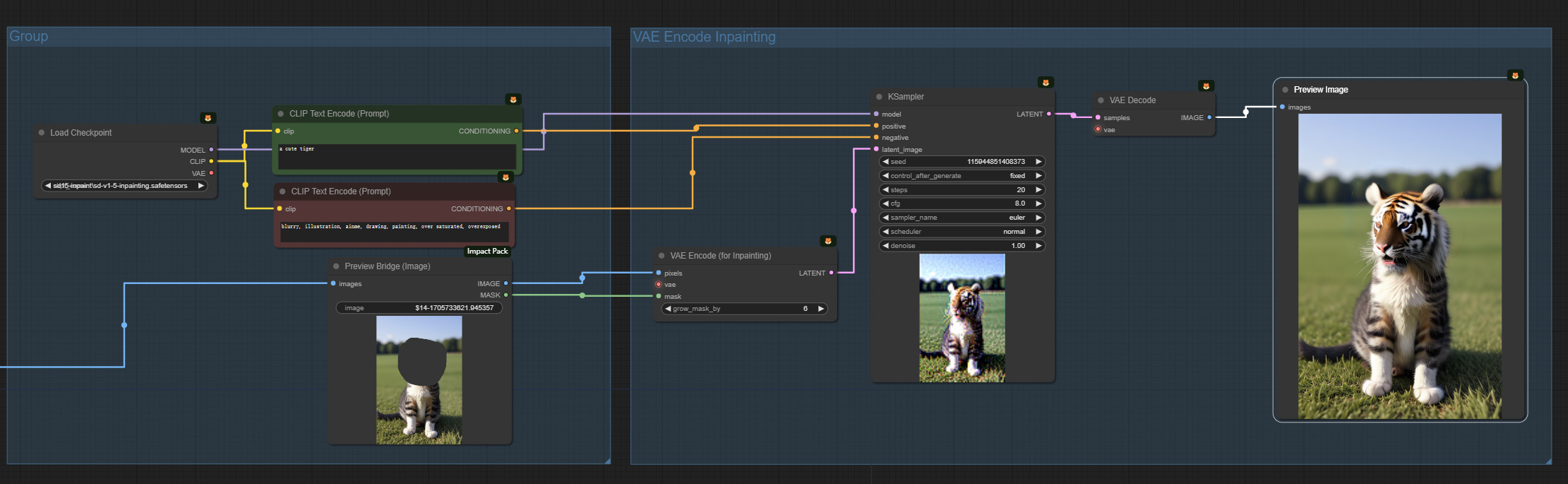

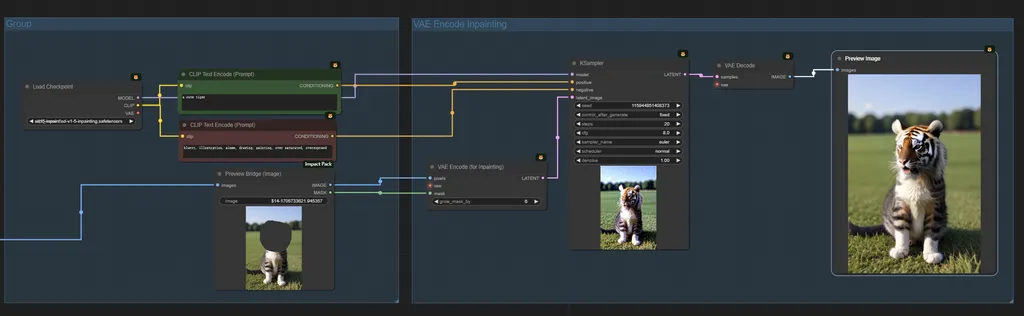

Using VAE Encode For Inpainting + Inpaint Model

Model used: SD inpainting model: sd-v1-5-inpainting

Positive prompt: a cute tiger

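Pass the image to be redrawn and the mask to the VAE Encode For Inpainting node.

Note that a denoise value of 1 is used here. This node fills the masked area with neutral gray before encoding, so the sampler has to regenerate that region completely; lowering the denoise leaves the redrawn area blurry.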
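To see why partial denoising fails with this node, it helps to look at the pixel preparation. As we read ComfyUI's source, VAE Encode For Inpainting rounds the mask and replaces every masked pixel with 0.5 gray on all channels before encoding, so nothing of the cat remains under the mask. A simplified pure-Python sketch of that step (the helper name is hypothetical, and the node's `grow_mask_by` dilation is left out):

```python
def fill_masked_with_gray(pixels, mask):
    """Replace masked pixels with neutral gray (0.5), leaving the rest untouched.

    Simplified sketch: the real node also grows the mask by `grow_mask_by`
    pixels before this step, which is skipped here.
    pixels: rows of (r, g, b) floats in 0..1; mask: rows of floats in 0..1.
    """
    out = []
    for pix_row, mask_row in zip(pixels, mask):
        new_row = []
        for rgb, m in zip(pix_row, mask_row):
            keep = 1.0 - round(m)  # 0.0 inside the mask, 1.0 outside
            # Shift to be centered on gray, zero the masked pixels, shift back.
            new_row.append(tuple((c - 0.5) * keep + 0.5 for c in rgb))
        out.append(new_row)
    return out


if __name__ == "__main__":
    image = [[(1.0, 0.0, 0.25), (0.9, 0.8, 0.1)]]
    mask = [[0.0, 1.0]]  # only the second pixel is masked
    print(fill_masked_with_gray(image, mask))
```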

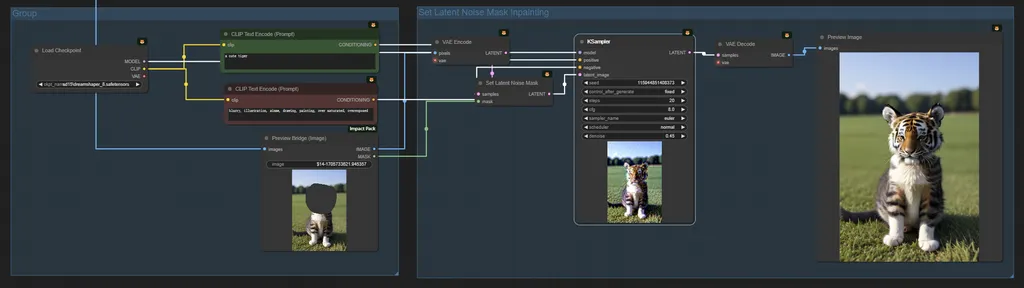
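For scripting, the same pipeline can be written in ComfyUI's API (JSON) format, where each node is keyed by an id and an input that links to another node is written as `[node_id, output_index]`; the graph is queued by POSTing `{"prompt": graph}` to a running server's `/prompt` endpoint. The sketch below is an assumption-laden reconstruction, not the original workflow: node ids, the image name `cat.png`, seed, steps, cfg, sampler, and the reuse of the cat's negative prompt are all illustrative, and the mask is taken from the Load Image node's MASK output.

```python
import json

# Checkpoint name as listed in this workflow's model details; adjust to your install.
INPAINT_CKPT = "sd15-inpaint\\sd-v1-5-inpainting.safetensors"


def build_vae_encode_inpaint_graph(image_name="cat.png", denoise=1.0):
    """Return an API-format prompt: VAE Encode For Inpainting + inpaint model.

    Illustrative values only. "cat.png" is a hypothetical file in ComfyUI's
    input folder whose alpha channel holds the painted mask.
    """
    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": INPAINT_CKPT}},
        # Outputs: 0 = IMAGE, 1 = MASK (from the alpha channel).
        "2": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "a cute tiger", "clip": ["1", 1]}},
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"text": "blurry, illustration, anime, drawing, painting, "
                                 "over saturated, overexposed",
                         "clip": ["1", 1]}},
        "5": {"class_type": "VAEEncodeForInpaint",
              "inputs": {"pixels": ["2", 0], "vae": ["1", 2],
                         "mask": ["2", 1], "grow_mask_by": 6}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["3", 0],
                         "negative": ["4", 0], "latent_image": ["5", 0],
                         "seed": 42, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
        "7": {"class_type": "VAEDecode",
              "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage",
              "inputs": {"images": ["7", 0], "filename_prefix": "tiger_inpaint"}},
    }


if __name__ == "__main__":
    # POST this body to http://127.0.0.1:8188/prompt to queue it.
    print(json.dumps({"prompt": build_vae_encode_inpaint_graph()}, indent=2))
```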

Using VAE Encode + SetNoiseMask + Standard Model

Model used: SD standard model: DreamShaper 8

Positive prompt: a cute tiger

Encode the image with VAE Encode, then use the Set Latent Noise Mask node to attach the inpainting mask to the latent, so that the sampler only redraws the masked area.
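Conceptually, the noise mask limits the sampler's changes to the masked region. As we understand ComfyUI's sampler, at every step it puts the (appropriately noised) original latent back outside the mask and keeps the model's output inside it. The hypothetical helper below shows that blend once, on flat lists of latent values, as a simplified sketch:

```python
def blend_with_noise_mask(sampled, original, mask):
    """Keep the sampler's output inside the mask and the original latent outside.

    Simplified sketch: ComfyUI applies this kind of blend inside the sampling
    loop at every step, not once at the end. All arguments are flat lists of
    equal length; mask values are in 0..1 (1 = redraw).
    """
    if not (len(sampled) == len(original) == len(mask)):
        raise ValueError("sampled, original and mask must have the same length")
    return [s * m + o * (1.0 - m) for s, o, m in zip(sampled, original, mask)]


if __name__ == "__main__":
    # Unmasked, fully masked, and half-masked latent values.
    print(blend_with_noise_mask([0.9, 0.9, 0.9], [0.1, 0.2, 0.3], [0.0, 1.0, 0.5]))
```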

Note that a low denoise value of 0.45 is used here. The denoise value trades off between the two subjects: lower values keep the result closer to the original cat, higher values make it look more like a tiger. Values that are too high can make the redrawn area inconsistent with the rest of the image.
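What denoise actually changes is where sampling starts on the noise schedule. As we understand ComfyUI's KSampler, a denoise below 1 builds a schedule of `int(steps / denoise)` steps and runs only the last `steps` of them, so the sampler starts from a partly noised version of the encoded image rather than from pure noise. A small sketch of that arithmetic (the function is hypothetical, and 20 steps is an assumed setting):

```python
def denoise_schedule(steps, denoise):
    """Return (schedule_length, first_step_run) for a KSampler run.

    Sketch of the denoise < 1 behavior as we understand it: build a longer
    schedule of int(steps / denoise) steps and skip its noisiest part,
    running only the last `steps` steps.
    """
    if denoise >= 0.9999:
        return steps, 0  # full schedule, starting from pure noise
    if denoise <= 0.0:
        return 0, 0  # nothing is sampled; the latent is returned unchanged
    total = int(steps / denoise)
    return total, total - steps


if __name__ == "__main__":
    print(denoise_schedule(20, 0.45))  # (44, 24)
    print(denoise_schedule(20, 1.0))   # (20, 0)
```

With 20 steps and denoise 0.45, the sampler runs the last 20 steps of a 44-step schedule and skips the 24 noisiest ones, which is why more of the cat's structure survives at low denoise.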

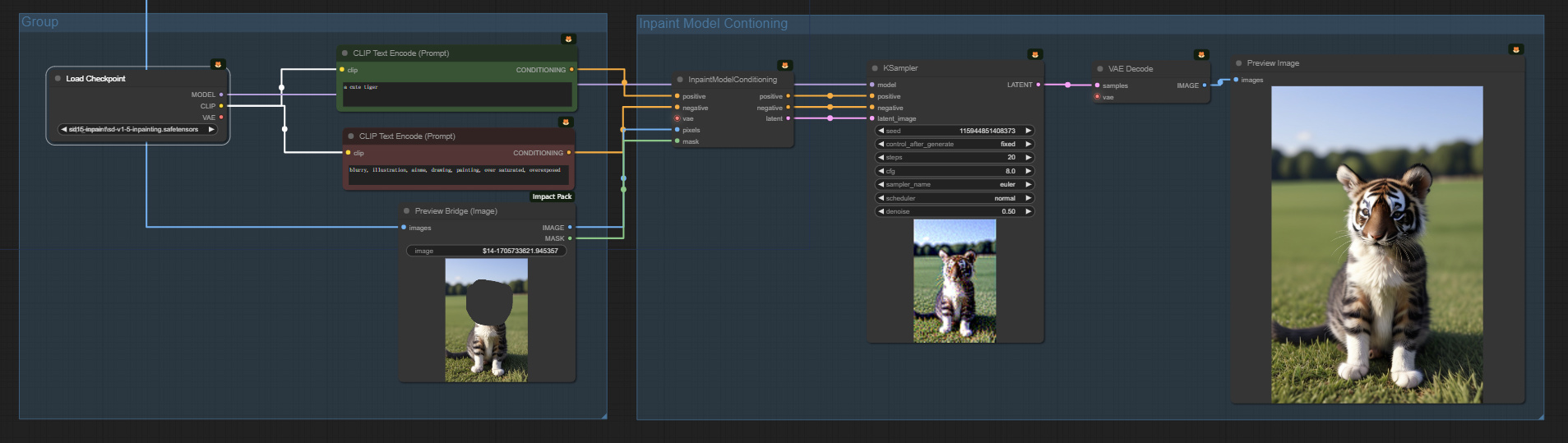

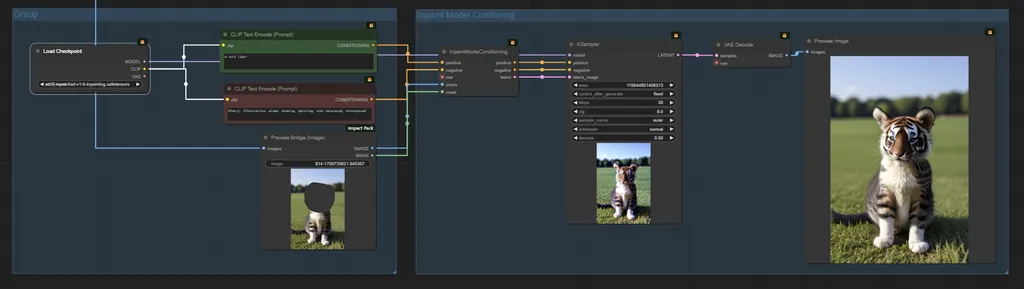
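In ComfyUI's API (JSON) format, this method changes only the latent path. The fragment below is a sketch that assumes hypothetical node ids: "1" is a CheckpointLoaderSimple loading the standard model, "2" a LoadImage whose outputs 0 and 1 are the image and mask, and "3"/"4" the positive and negative CLIPTextEncode nodes; the sampler settings are illustrative. The KSampler output is then decoded with VAEDecode as usual.

```python
import json


def set_noise_mask_nodes(denoise=0.45):
    """API-format nodes for VAE Encode + Set Latent Noise Mask + KSampler.

    Assumes hypothetical ids "1" (checkpoint loader), "2" (LoadImage),
    "3"/"4" (positive/negative prompts) exist elsewhere in the graph.
    """
    return {
        # Encode the whole original image, not a gray-filled copy.
        "5": {"class_type": "VAEEncode",
              "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        # Attach the painted mask so only that area is resampled.
        "6": {"class_type": "SetLatentNoiseMask",
              "inputs": {"samples": ["5", 0], "mask": ["2", 1]}},
        "7": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["3", 0],
                         "negative": ["4", 0], "latent_image": ["6", 0],
                         "seed": 42, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
    }


if __name__ == "__main__":
    print(json.dumps(set_noise_mask_nodes(), indent=2))
```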

Using Inpaint Model Conditioning + Standard Model / Inpainting Model

With a standard model, this method gives the same result as "Using VAE Encode + SetNoiseMask + Standard Model". This section focuses on using it with the inpainting model.

Model used: SD inpainting model: sd-v1-5-inpainting

Positive prompt: a cute tiger

Pass the image to be redrawn and the mask to the Inpaint Model Conditioning node.

Note that a low denoise value of 0.45 is used here. Adjusting the denoise tunes the result in the same way as in the previous method, but without the incoherence that appears there at high values.

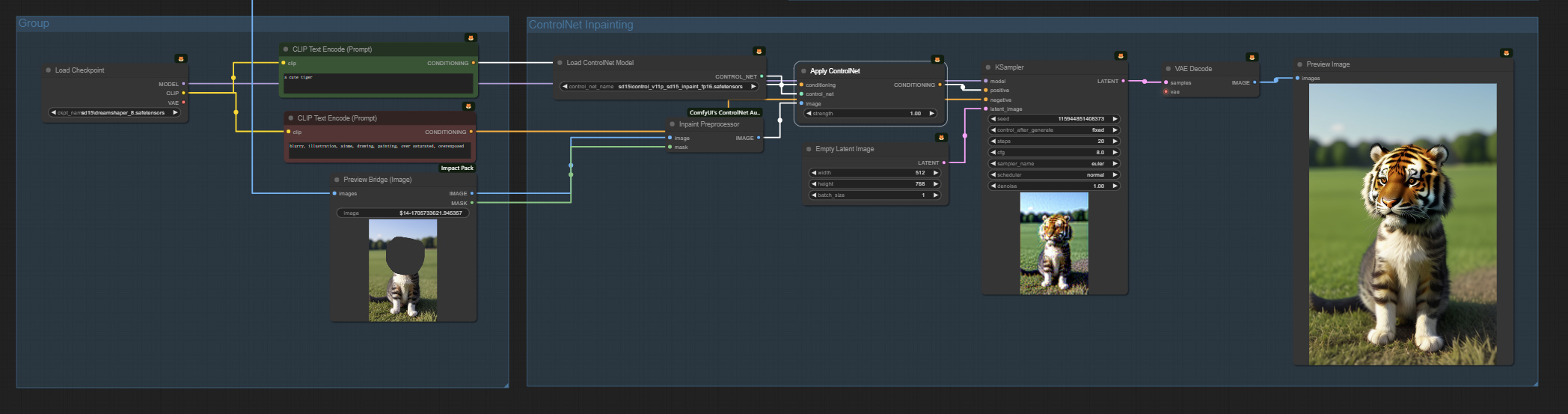

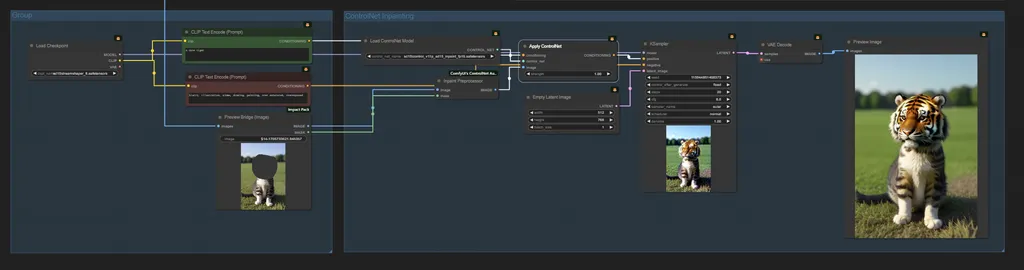
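In ComfyUI's API (JSON) format, Inpaint Model Conditioning replaces both the conditioning and the latent source: its outputs 0, 1 and 2 are the positive conditioning, negative conditioning and latent. The fragment below is a sketch with hypothetical node ids ("1" = CheckpointLoaderSimple loading the inpainting model, "2" = LoadImage with image and mask outputs, "3"/"4" = positive and negative prompts) and illustrative sampler settings. The `noise_mask` input exists only in newer ComfyUI releases, so treat it as an assumption for your version.

```python
import json


def inpaint_model_conditioning_nodes(denoise=0.45):
    """API-format nodes for Inpaint Model Conditioning + KSampler.

    Assumes hypothetical ids "1" (checkpoint loader), "2" (LoadImage),
    "3"/"4" (positive/negative prompts) exist elsewhere in the graph.
    """
    return {
        "5": {"class_type": "InpaintModelConditioning",
              "inputs": {"positive": ["3", 0], "negative": ["4", 0],
                         "vae": ["1", 2], "pixels": ["2", 0], "mask": ["2", 1],
                         # Only in newer ComfyUI releases; remove if rejected.
                         "noise_mask": True}},
        "6": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0],
                         # Outputs 0, 1, 2 = positive, negative, latent.
                         "positive": ["5", 0], "negative": ["5", 1],
                         "latent_image": ["5", 2],
                         "seed": 42, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
    }


if __name__ == "__main__":
    print(json.dumps(inpaint_model_conditioning_nodes(), indent=2))
```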

Using ControlNet Inpainting + Standard Model

Model used: SD standard model: DreamShaper 8

Positive prompt: a cute tiger

The workflow is built like any other ControlNet workflow, except that it uses the Inpaint Preprocessor node: it takes the pixel image and the inpaint mask as input, and its output goes to the Apply ControlNet node.
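The preprocessor's job is to mark which pixels the ControlNet should fill. As we understand the comfyui_controlnet_aux implementation, it sets every pixel where the mask exceeds 0.5 to -1 on all channels, a value that ordinary images in the 0 to 1 range never contain. A pure-Python sketch (hypothetical helper; the real node also resizes the mask to the image, which is omitted here):

```python
def mark_masked_pixels(pixels, mask, threshold=0.5):
    """Set pixels where mask > threshold to -1.0 on every channel.

    pixels: rows of (r, g, b) floats in 0..1; mask: rows of floats in 0..1
    with the same dimensions as pixels.
    """
    return [
        [(-1.0, -1.0, -1.0) if m > threshold else rgb
         for rgb, m in zip(pix_row, mask_row)]
        for pix_row, mask_row in zip(pixels, mask)
    ]


if __name__ == "__main__":
    image = [[(0.2, 0.4, 0.6), (1.0, 1.0, 1.0)]]
    print(mark_masked_pixels(image, [[0.0, 1.0]]))
```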

Note that the denoise can be set as high as 1 without sacrificing global consistency.

You can also control how much detail is introduced by adjusting the strength of the Apply ControlNet node.

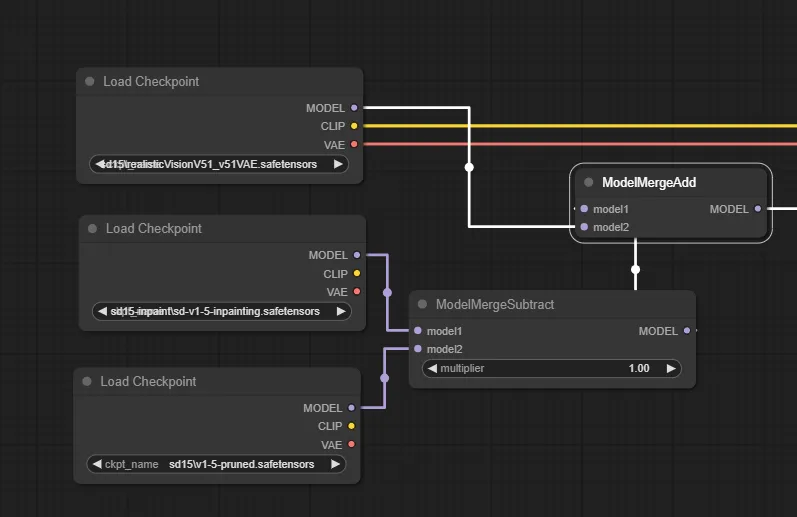
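An API-format (JSON) fragment for this method, as a sketch under assumptions: node ids "1" to "4" are a CheckpointLoaderSimple loading the standard model, a LoadImage (image at output 0, mask at output 1), and the positive and negative CLIPTextEncode nodes. The ControlNet file name `control_v11p_sd15_inpaint.pth`, the 512×512 EmptyLatentImage used as the latent source, and the sampler settings are illustrative choices, not taken from the original workflow. `InpaintPreprocessor` comes from the comfyui_controlnet_aux custom node pack, so its inputs may differ between versions.

```python
import json


def controlnet_inpaint_nodes(strength=1.0, denoise=1.0):
    """API-format nodes for ControlNet inpainting with a standard model.

    Assumes hypothetical ids "1" (checkpoint loader), "2" (LoadImage),
    "3"/"4" (positive/negative prompts) exist elsewhere in the graph.
    """
    return {
        "5": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": "control_v11p_sd15_inpaint.pth"}},
        "6": {"class_type": "InpaintPreprocessor",
              "inputs": {"image": ["2", 0], "mask": ["2", 1]}},
        # Strength controls how strongly the ControlNet guides the result.
        "7": {"class_type": "ControlNetApply",
              "inputs": {"conditioning": ["3", 0], "control_net": ["5", 0],
                         "image": ["6", 0], "strength": strength}},
        "8": {"class_type": "EmptyLatentImage",
              "inputs": {"width": 512, "height": 512, "batch_size": 1}},
        "9": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["7", 0],
                         "negative": ["4", 0], "latent_image": ["8", 0],
                         "seed": 42, "steps": 20, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
    }


if __name__ == "__main__":
    print(json.dumps(controlnet_inpaint_nodes(), indent=2))
```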

Converting Any Standard SD Model to an Inpaint Model

Subtract the weights of the standard SD model from those of the SD inpainting model; the difference is what makes the model inpaint. Adding this difference to another standard SD model built on the same base yields an inpainting version of that model.
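The recipe is `custom + (inpaint - base)`, applied parameter by parameter. The pure-Python sketch below uses dicts of flat float lists as stand-ins for real checkpoint tensors; `add_difference` and its `multiplier` knob are hypothetical. One practical wrinkle: the SD 1.5 inpainting UNet's first convolution takes 9 input channels (4 latent, 4 masked-image latent, 1 mask) instead of 4, so that tensor cannot be subtracted directly. This sketch simply keeps the inpaint model's value for any key whose shapes differ, which is a simplification.

```python
def add_difference(custom, inpaint, base, multiplier=1.0):
    """Merge weights as custom + multiplier * (inpaint - base), key by key.

    Models are dicts mapping parameter names to flat lists of floats.
    Keys missing from `custom` or `base`, or whose lengths differ, are
    copied from `inpaint` unchanged (simplified handling).
    """
    merged = {}
    for key, inp in inpaint.items():
        base_w = base.get(key)
        custom_w = custom.get(key)
        if (base_w is None or custom_w is None
                or not len(inp) == len(base_w) == len(custom_w)):
            merged[key] = list(inp)
            continue
        merged[key] = [c + multiplier * (i - b)
                       for c, i, b in zip(custom_w, inp, base_w)]
    return merged


if __name__ == "__main__":
    custom = {"w": [1.0, 2.0]}
    inpaint = {"w": [0.5, 0.5], "conv_in": [1.0, 2.0, 3.0]}
    base = {"w": [0.25, 0.5], "conv_in": [1.0]}
    print(add_difference(custom, inpaint, base))
```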

Outpainting

A similar workflow also works for outpainting, which follows the same principle as inpainting. The Pad Image for Outpainting node pads the image automatically and creates the matching mask; the official example (see References) combines it with the v2 inpainting model.
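As we read ComfyUI's source, Pad Image for Outpainting fills the new border with 0.5 gray and returns a mask that is 1 over the border and 0 over the original pixels, with `feathering` softening the mask edge. A pure-Python sketch with feathering left out (the helper name is hypothetical):

```python
def pad_for_outpaint(pixels, left, top, right, bottom):
    """Pad an image with neutral gray and build the matching outpaint mask.

    pixels: rows of (r, g, b) floats in 0..1. Returns (padded_pixels, mask),
    where mask is 1.0 in the new border and 0.0 over the original image.
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    new_w = width + left + right
    new_h = height + top + bottom
    gray = (0.5, 0.5, 0.5)
    padded = [[gray] * new_w for _ in range(new_h)]
    mask = [[1.0] * new_w for _ in range(new_h)]
    # Copy the original pixels into place and clear the mask over them.
    for y in range(height):
        for x in range(width):
            padded[y + top][x + left] = pixels[y][x]
            mask[y + top][x + left] = 0.0
    return padded, mask


if __name__ == "__main__":
    # A single red pixel, extended one pixel to the left and one downward.
    padded, mask = pad_for_outpaint([[(1.0, 0.0, 0.0)]], left=1, top=0, right=0, bottom=1)
    print(mask)  # [[1.0, 0.0], [1.0, 1.0]]
```

The padded image and mask are then passed on for inpainting exactly as in the methods above, with the border treated as the area to redraw.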

References

Official introduction example: https://comfyanonymous.github.io/ComfyUI_examples/inpaint/

Inpaint for AUTOMATIC1111: https://stable-diffusion-art.com/inpainting/

Inpaint for ComfyUI: https://stable-diffusion-art.com/inpaint-comfyui/#Inpainting_with_a_standard_model

ComfyUI: Inpaint every model: https://www.youtube.com/watch?v=nPYDm-pI0wM

Versions (1)

- latest (2 years ago)

Node Details

Primitive Nodes (5)

Anything Everywhere (4)

Prompts Everywhere (1)

Custom Nodes (31)

ComfyUI

- KSampler (5)

- VAEDecode (5)

- EmptyLatentImage (2)

- CLIPTextEncode (4)

- VAELoader (1)

- PreviewImage (4)

- CheckpointLoaderSimple (2)

- VAEEncodeForInpaint (1)

- SetLatentNoiseMask (1)

- VAEEncode (1)

- ControlNetLoader (1)

- InpaintModelConditioning (1)

- ControlNetApply (1)

- PreviewBridge (1)

- InpaintPreprocessor (1)

Model Details

Checkpoints (2)

sd15-inpaint\sd-v1-5-inpainting.safetensors

sd15\dreamshaper_8.safetensors

LoRAs (0)