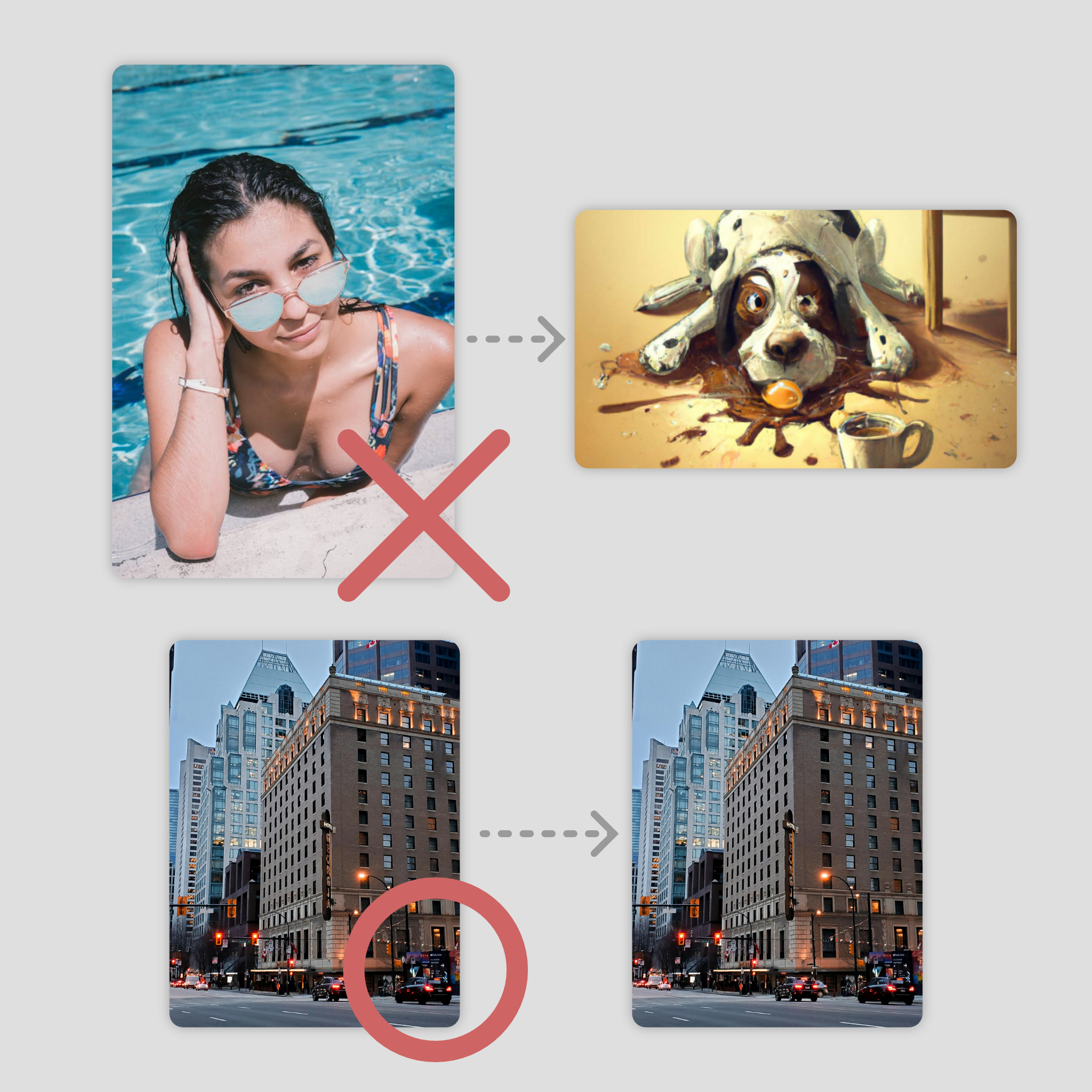

Unsafe image content detected with LLM

Description

What's this?

- This workflow replaces sensitive images with the well-known distasteful dog image.

- It uses an LLM, although there is hardly any reason to.

How?

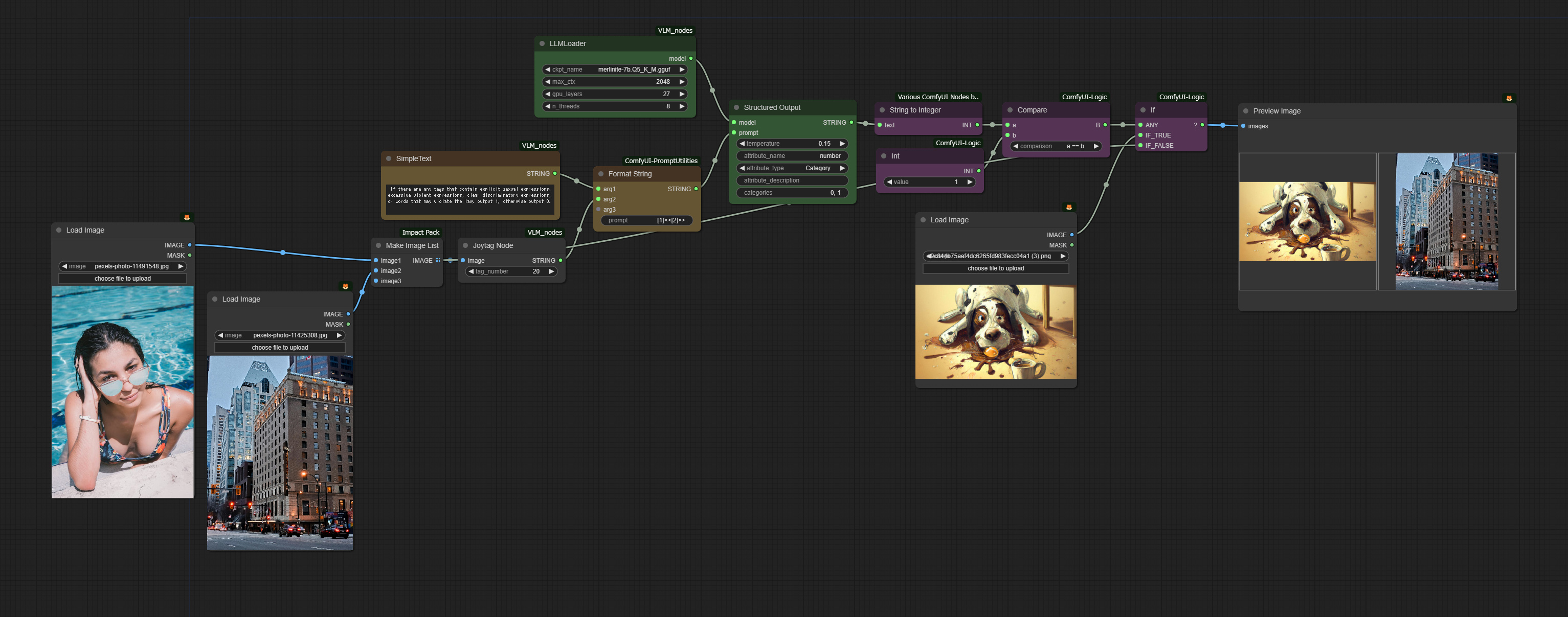

- Generate tags for the image using JoyTag

- Use merlinite-7b to determine whether any of those tags are sensitive

- If any are, replace the image with the dog image
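The three steps above can be sketched as plain control flow. This is only an illustration, not the workflow's actual node graph: `moderate`, `generate_tags`, and `is_sensitive` are hypothetical names, and the two callables merely stand in for the JoyTag and merlinite-7b nodes rather than calling them.

```python
from typing import Callable, List, TypeVar

Image = TypeVar("Image")


def moderate(
    image: Image,
    generate_tags: Callable[[Image], List[str]],
    is_sensitive: Callable[[List[str]], bool],
    replacement: Image,
) -> Image:
    """Return the replacement if the image's tags are judged sensitive."""
    tags = generate_tags(image)  # step 1: tag the image (JoyTag in the workflow)
    if is_sensitive(tags):       # step 2: judge the tags (merlinite-7b in the workflow)
        return replacement       # step 3: swap in the dog image
    return image                 # otherwise pass the original through


# Stub callables stand in for the real nodes (assumption: tags arrive as a list).
result = moderate(
    "input.png",
    lambda img: ["dog", "grass"],
    lambda tags: "nsfw" in tags,
    "dog.png",
)
print(result)
```

Passing the tagger and the classifier in as arguments keeps the branch logic independent of whichever model does the judging.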

Punchline of the story

- Most people have probably noticed, but there's really no need to use an LLM.

- All you have to do is create a set of sensitive tags and compare it with the tags JoyTag generated.

- If you change the prompt, you might be able to use it to identify kinds of images other than sensitive ones…

- If you want to waste computational resources, by all means, give it a try😏
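The LLM-free alternative mentioned above could look roughly like this. It is a minimal sketch under two assumptions: that the tagger's output is available as a comma-separated string, and that the entries in `SENSITIVE_TAGS` are placeholders you would replace with your own list. `parse_tags` and `has_sensitive_tag` are hypothetical helper names.

```python
from typing import AbstractSet, Set

# Placeholder tag list (assumption); substitute the tags you consider sensitive.
SENSITIVE_TAGS = frozenset({"nsfw", "nudity", "gore"})


def parse_tags(tag_string: str) -> Set[str]:
    """Split a comma-separated tag string into a set of lowercase tags."""
    return {t.strip().lower() for t in tag_string.split(",") if t.strip()}


def has_sensitive_tag(
    tag_string: str, sensitive: AbstractSet[str] = SENSITIVE_TAGS
) -> bool:
    """True if at least one generated tag appears in the sensitive set."""
    return not sensitive.isdisjoint(parse_tags(tag_string))


print(has_sensitive_tag("1girl, Nudity, outdoors"))
print(has_sensitive_tag("dog, grass"))
```

A set intersection like this is deterministic and effectively free compared with an LLM call, which is the point the list above is making.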

Resources (1)

安全でない画像コンテンツが検出されました.png (334.8 kB)

Versions (1)

- latest (2 years ago)

Node Details

Primitive Nodes (1)

- JWStringToInteger (1)

Custom Nodes (11)

ComfyUI

- PreviewImage (1)

- LoadImage (2)

- Compare (1)

- If ANY execute A else B (1)

- Int (1)

- PromptUtilitiesFormatString (1)

- LLMLoader (1)

- SimpleText (1)

- Joytag (1)

- StructuredOutput (1)

Model Details

Checkpoints (0)

LoRAs (0)