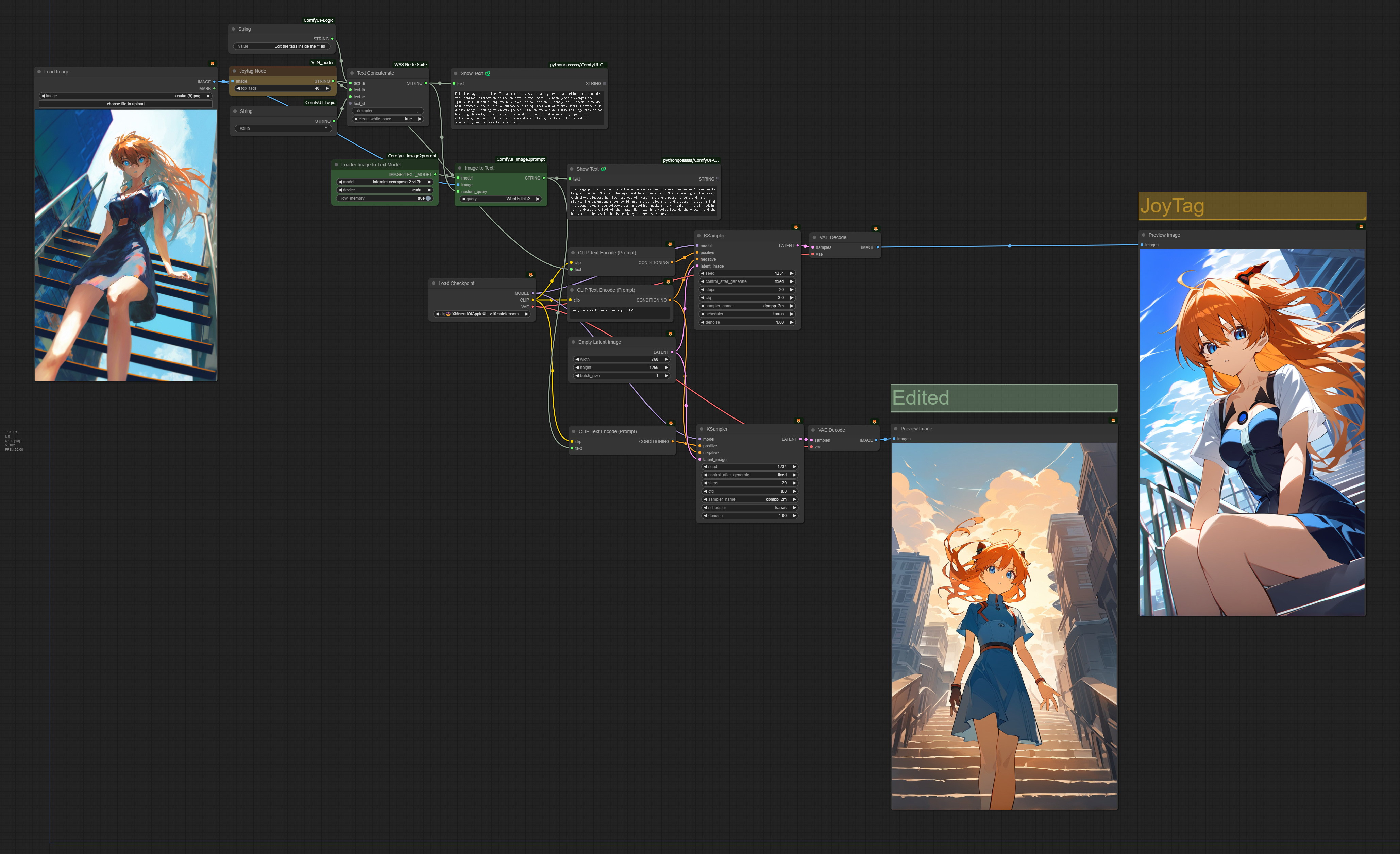

Edit the tags interrogated by JoyTag using an MLLM

Description

Concept

- Most MLLMs are conservative: when asked to describe an image, they often cannot name anime characters, and they ignore NSFW content entirely.

- WD14-tagger and JoyTag, by contrast, produce fairly specific tags, but they only list words and cannot explain the context.

- Asking the MLLM to describe the “input image” with the help of the “tags interrogated by JoyTag” therefore combines the strengths of both.
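As a rough illustration of the idea, the text sent to the MLLM can be thought of as the JoyTag tags wrapped in “” and joined with the instruction from this workflow's prompt. The sketch below is only an assumption about how the string is assembled: the helper name `build_caption_prompt`, the tag-first ordering, and the example tags are hypothetical and not taken from the workflow itself, which does this with String and Text Concatenate nodes.

```python
# Instruction text copied from this workflow's Prompt section.
INSTRUCTION = (
    "Edit the tags inside the “” as much as possible and generate a caption "
    "that includes the location information of the objects in the image."
)


def build_caption_prompt(tags, instruction=INSTRUCTION):
    """Hypothetical helper: wrap the tags in “” and append the instruction.

    The ordering (tags first, instruction second) is an assumption; the
    workflow may concatenate the strings the other way around.
    """
    # Drop empty entries and surrounding whitespace before joining.
    cleaned = [t.strip() for t in tags if t.strip()]
    tag_string = ", ".join(cleaned)
    return f"“{tag_string}” {instruction}"


if __name__ == "__main__":
    # Made-up tags standing in for JoyTag output.
    example_tags = ["1girl", "outdoors", "cherry blossoms"]
    print(build_caption_prompt(example_tags))
```

The resulting string would be passed to the MLLM together with the input image, so the model can check the tags against what it actually sees.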

Custom Node

Prompt

Edit the tags inside the “” as much as possible and generate a caption that includes the location information of the objects in the image.

Aside

I tried LLaVA 1.6, but it didn’t work well. In my experience, InternLM-XComposer2-VL is far better at understanding the prompt.

Versions (1)

- latest (2 years ago)

Node Details

Primitive Nodes (0)

Custom Nodes (20)

ComfyUI

- CLIPTextEncode (3)

- KSampler (2)

- VAEDecode (2)

- EmptyLatentImage (1)

- CheckpointLoaderSimple (1)

- PreviewImage (2)

- LoadImage (1)

- LoadImage2TextModel (1)

- Image2Text (1)

- String (2)

- ShowText|pysssss (2)

- Joytag (1)

- Text Concatenate (1)

Model Details

Checkpoints (1)

😎-XL\heartOfAppleXL_v10.safetensors

LoRAs (0)