ComfyUI workflow for Flux (simple)

Description

This is a simple workflow for running Flux AI in ComfyUI.

The easy way: just download this one and run it like any other checkpoint ;) https://civitai.com/models/628682/flux-1-checkpoint-easy-to-use

Check out more detailed instructions here: https://maitruclam.com/flux-ai-la-gi/

It is just 20 GB, and there is nothing else to download.

I hit a bug when I tried to run Flux on A1111, but I was finally able to use it on ComfyUI :V

Old version:

You will need at least 30 GB of free space for the files below :)

***

If you are a newbie like me, you will be less confused when trying to figure out how to use Flux on ComfyUI.

In addition to this workflow, you will also need:

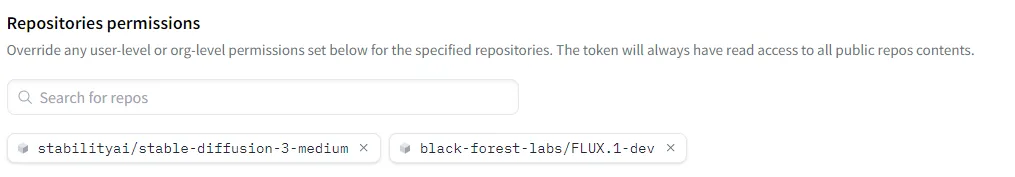

Download Model:

1. Model: flux1-dev.safetensors (named flux1-dev.sft in older uploads): 23.8 GB

Link: https://huggingface.co/black-forest-labs/FLUX.1-dev/tree/main

Location: ComfyUI/models/unet/

Download CLIP:

1. t5xxl_fp16.safetensors: 9.79 GB

2. clip_l.safetensors: 246 MB

3. (Optional, if your machine has less than 32 GB of RAM TvT) t5xxl_fp8_e4m3fn.safetensors: 4.89 GB

Link: https://huggingface.co/comfyanonymous/flux_text_encoders/tree/main

Location: ComfyUI/models/clip/
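If you are not sure which T5 file to pick, the choice can be sketched as a small shell helper. This is only an illustration: the 32 GB threshold is the one mentioned in the list above, and the function name `choose_t5` is made up for this example. It reads `/proc/meminfo`, so it assumes a Linux machine.

```shell
# Pick a T5 text encoder file name from the amount of RAM in GB.
# choose_t5 is a hypothetical helper; the 32 GB cut-off is taken from the list above.
choose_t5() {
    ram_gb=$1
    if [ "$ram_gb" -lt 32 ]; then
        echo "t5xxl_fp8_e4m3fn.safetensors"
    else
        echo "t5xxl_fp16.safetensors"
    fi
}

# On Linux, MemTotal in /proc/meminfo is reported in kB.
# Round up, because the kernel reports slightly less than the installed amount.
if [ -r /proc/meminfo ]; then
    total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    total_gb=$(( (total_kb + 1048575) / 1048576 ))
    echo "About ${total_gb} GB of RAM, suggested encoder: $(choose_t5 "$total_gb")"
fi
```

You still need clip_l.safetensors either way; only the T5 file changes.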

Download VAE:

1. ae.safetensors (formerly ae.sft): 335 MB

Link: https://huggingface.co/black-forest-labs/FLUX.1-schnell/blob/main/ae.safetensors

Location: ComfyUI/models/vae/
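Once everything is downloaded, a quick check along these lines can confirm the files landed in the folders listed above. It is a rough sketch, not part of the workflow: the function names are hypothetical, and it accepts both the `.sft` and `.safetensors` spellings as well as either T5 variant.

```shell
# Succeeds if at least one of the given files exists.
any_exists() {
    for f in "$@"; do
        if [ -f "$f" ]; then
            return 0
        fi
    done
    return 1
}

# Check a ComfyUI folder for the Flux model, text encoders, and VAE.
# Prints one line per missing item and fails if anything is missing.
check_flux_files() {
    root=$1
    missing=0
    any_exists "$root/models/unet/flux1-dev.sft" "$root/models/unet/flux1-dev.safetensors" \
        || { echo "MISSING: Flux model in models/unet/"; missing=$((missing + 1)); }
    any_exists "$root/models/clip/t5xxl_fp16.safetensors" "$root/models/clip/t5xxl_fp8_e4m3fn.safetensors" \
        || { echo "MISSING: T5 encoder in models/clip/"; missing=$((missing + 1)); }
    any_exists "$root/models/clip/clip_l.safetensors" \
        || { echo "MISSING: clip_l.safetensors in models/clip/"; missing=$((missing + 1)); }
    any_exists "$root/models/vae/ae.sft" "$root/models/vae/ae.safetensors" \
        || { echo "MISSING: VAE in models/vae/"; missing=$((missing + 1)); }
    [ "$missing" -eq 0 ]
}

# Example call (adjust the path to your install):
#   check_flux_files /home/ubuntu/ComfyUI
```

If it prints nothing, all four pieces are in place.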

If you are using an Ubuntu VPS like me, the commands are as simple as this:

# Download t5xxl_fp16.safetensors to the directory ComfyUI/models/clip/

wget -P /home/ubuntu/ComfyUI/models/clip/ https://huggingface.co/comfyanonymous/flux_text_encoders/resolve/main/t5xxl_fp16.safetensors

# Download clip_l.safetensors to ComfyUI/models/clip/

wget -P /home/ubuntu/ComfyUI/models/clip/ https://huggingface.co/comfyanonymous/flux_text_encoders/resolve/main/clip_l.safetensors

# (Optional) Download t5xxl_fp8_e4m3fn.safetensors to ComfyUI/models/clip/

wget -P /home/ubuntu/ComfyUI/models/clip/ https://huggingface.co/comfyanonymous/flux_text_encoders/resolve/main/t5xxl_fp8_e4m3fn.safetensors

# Download ae.safetensors to ComfyUI/models/vae/

wget -P /home/ubuntu/ComfyUI/models/vae/ https://huggingface.co/black-forest-labs/FLUX.1-schnell/resolve/main/ae.safetensors

For the model itself, you will need to generate a Hugging Face access token and pass it along with the download request, because FLUX.1-dev is a gated repository.

I don't know much about access tokens, so you may want to read up on them yourself.
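A minimal sketch of what a token-based download could look like, in the same style as the commands above. The assumptions here are mine, not from the original tutorial: the token is stored in an environment variable called `HF_TOKEN`, you have already accepted the license on the FLUX.1-dev page while logged in, and the file is served as `flux1-dev.safetensors`. The function name `download_flux_dev` is made up for this example.

```shell
# Download the gated FLUX.1-dev model with a Hugging Face access token.
# Assumptions: the token is in HF_TOKEN, the license was accepted on the
# model page, and the file is served under the name flux1-dev.safetensors.
download_flux_dev() {
    dest=${1:-/home/ubuntu/ComfyUI/models/unet/}
    if [ -z "${HF_TOKEN:-}" ]; then
        echo "HF_TOKEN is not set; create a token in your Hugging Face settings first" >&2
        return 1
    fi
    # Send the token as a Bearer header so the gated file can be fetched.
    wget --header="Authorization: Bearer ${HF_TOKEN}" -P "$dest" \
        https://huggingface.co/black-forest-labs/FLUX.1-dev/resolve/main/flux1-dev.safetensors
}

# Example call:
#   export HF_TOKEN=hf_xxx   # placeholder, use your own token
#   download_flux_dev /home/ubuntu/ComfyUI/models/unet/
```

Keeping the token in an environment variable means it does not end up hard-coded in a script you might share later.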

Why don't I make a tutorial for Windows 10, 11 or XP? What do you expect from a Mario 64 laptop :)

Original tutorial: https://comfyanonymous.github.io/ComfyUI_examples/flux/

Note: It works well with FLUX.1-Turbo-Alpha and with human-face LoRAs. 👤✨

Useful and FREE resources:

❤️ Free servers for making art with Flux: Shakker, Tensor Art, and Sea Art

✨ More FLUX LoRAs? A list and detailed description of each LoRA I have made is here: https://maitruclam.com/lora 📚

🆕 First time using FLUX? Explanation and tutorial for A1111 Forge (offline) and ComfyUI here: https://maitruclam.com/flux-ai-la-gi/ 🌐

🛠️ How to train your own LoRA with Flux? My detailed instructions are here: https://maitruclam.com/training-flux/ 📚

❤️ Donate to me (I would be really surprised if you did that! 😄): https://maitruclam.com/donate

Find me / Contact for work on:

📱 Facebook: @maitruclam4real

💬 Discord: @maitruclam

🌐 Web: maitruclam.com

Versions (2)

- latest (2 years ago)

- v20240801-174718

Node Details

Primitive Nodes (0)

Custom Nodes (12)

ComfyUI

- SamplerCustomAdvanced (1)

- BasicGuider (1)

- KSamplerSelect (1)

- VAEDecode (1)

- RandomNoise (1)

- UNETLoader (1)

- VAELoader (1)

- EmptyLatentImage (1)

- CLIPTextEncode (1)

- BasicScheduler (1)

- DualCLIPLoader (1)

- SaveImage (1)

Model Details

Checkpoints (0)

LoRAs (0)