Vid2Vid Style Transfer with IPA & Hotshot XL

5.0

2 reviews

Description

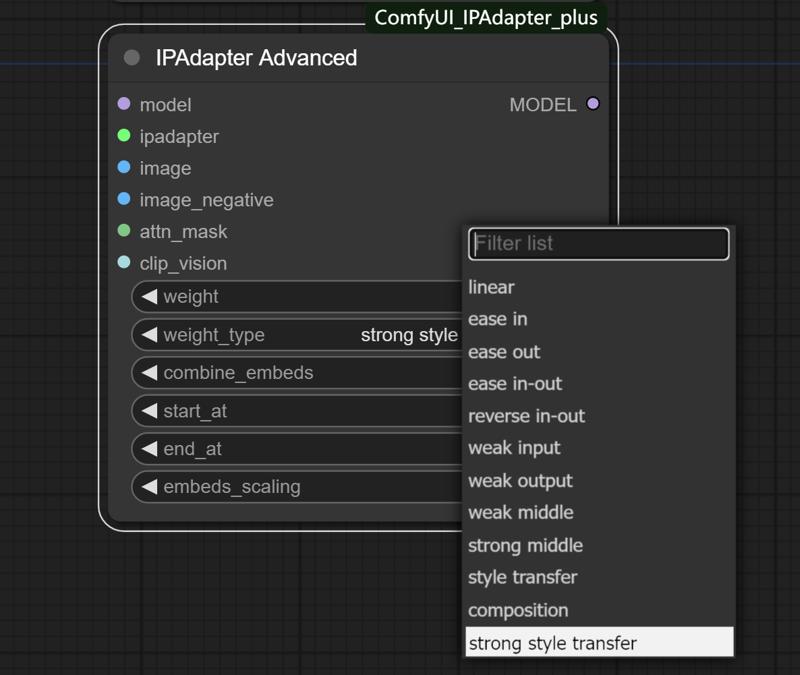

I found that IPAdapter's "Strong Style Transfer" option performs exceptionally well for Vid2Vid.

Just update your IPAdapter and have fun~!

Checkpoint I used:

Any Turbo or Lightning model will work well, such as Dreamshaper XL Turbo or Lightning, or Juggernaut XL Lightning. (Remember to use the sampler the model requires and to lower your CFG.)
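As a rough illustration of the sampler and CFG reminder above, here is a small sketch of KSampler settings for a Turbo or Lightning checkpoint, plus a sanity check. The concrete values and the thresholds (`max_steps`, `max_cfg`) are assumptions for illustration only, not values taken from this workflow; always check the model page of the checkpoint you actually use.

```python
# Assumed starting values for a Turbo/Lightning SDXL checkpoint.
# These are illustrative, not the settings stored in this workflow.
TURBO_KSAMPLER = {
    "steps": 6,
    "cfg": 2.0,
    "sampler_name": "dpmpp_sde",  # a sampler name available in ComfyUI's KSampler
    "scheduler": "karras",
}


def check_fast_model_settings(steps, cfg, max_steps=12, max_cfg=3.0):
    """Return warnings if the settings look like regular-SDXL values.

    max_steps and max_cfg are hypothetical thresholds chosen for this sketch.
    """
    warnings = []
    if cfg > max_cfg:
        warnings.append(f"CFG {cfg} is high for a Turbo/Lightning model; try lowering it.")
    if steps > max_steps:
        warnings.append(f"{steps} steps is more than a Turbo/Lightning model usually needs.")
    return warnings


if __name__ == "__main__":
    for message in check_fast_model_settings(TURBO_KSAMPLER["steps"], TURBO_KSAMPLER["cfg"]):
        print(message)
    # Typical settings for a regular SDXL checkpoint trigger both warnings.
    for message in check_fast_model_settings(steps=30, cfg=7.0):
        print(message)
```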

HotshotXL download:

Download: https://huggingface.co/Kosinkadink/HotShot-XL-MotionModels/tree/main

Context_length: 8 works well (Hotshot-XL was trained on 8-frame clips).
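To give a feel for what a context length of 8 means on a longer video, here is a simplified sketch of splitting a frame sequence into overlapping 8-frame windows. This is a plain sliding window written for illustration; it is not the scheduling algorithm the context options node actually uses, and the overlap value of 2 is an assumption.

```python
def sliding_windows(num_frames, context_length=8, context_overlap=2):
    """Split frame indices into overlapping windows of context_length frames.

    Simplified illustration only: a basic sliding window, with the last
    window aligned to the end of the clip so every frame is covered.
    """
    if context_overlap >= context_length:
        raise ValueError("context_overlap must be smaller than context_length")
    if num_frames <= context_length:
        # Short clips fit in a single window.
        return [list(range(num_frames))]

    step = context_length - context_overlap
    windows = []
    start = 0
    while start + context_length < num_frames:
        windows.append(list(range(start, start + context_length)))
        start += step
    # Final window ends exactly on the last frame.
    windows.append(list(range(num_frames - context_length, num_frames)))
    return windows


if __name__ == "__main__":
    for window in sliding_windows(20):
        print(window[0], "to", window[-1])
```

Each window is what the motion model sees at once, so a longer video is processed as many 8-frame pieces whose overlapping frames help keep the motion consistent.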

I also recommend reading Inner_Reflections_AI's article, which provides very detailed explanations: https://civitai.com/articles/2601

A lower-memory VAE:

Download: https://civitai.com/models/140686?modelVersionId=155933

Versions (1)

- latest (2 years ago)

Node Details

Primitive Nodes (1)

Anything Everywhere3 (1)

Custom Nodes (36)

- ADE_AnimateDiffLoaderGen1 (1)

- ADE_StandardUniformContextOptions (1)

ComfyUI

- ControlNetApplyAdvanced (3)

- VAELoader (1)

- CLIPTextEncode (2)

- VAEEncode (2)

- KSampler (2)

- VAEDecode (2)

- LoraLoader (1)

- UpscaleModelLoader (1)

- CheckpointLoaderSimple (1)

- ImageScaleBy (1)

- ImageUpscaleWithModel (1)

- ImageScale (2)

- LoadImage (1)

- AIO_Preprocessor (3)

- IPAdapterUnifiedLoader (1)

- IPAdapterAdvanced (1)

- ControlNetLoaderAdvanced (3)

- VHS_VideoCombine (4)

- VHS_LoadVideoPath (1)

- ColorMatch (1)
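If you want to reproduce a node count like the list above from a workflow file of your own, a minimal sketch follows. It assumes the workflow is in ComfyUI's saved UI format, where a top-level "nodes" list holds entries with a "type" field; the `sample_workflow` dictionary is a tiny hypothetical stand-in, not this workflow.

```python
from collections import Counter


def count_node_types(workflow):
    """Count how many times each node type appears in a workflow dict."""
    return Counter(node["type"] for node in workflow.get("nodes", []))


# Hypothetical miniature workflow used only to demonstrate the function.
sample_workflow = {
    "nodes": [
        {"id": 1, "type": "KSampler"},
        {"id": 2, "type": "KSampler"},
        {"id": 3, "type": "VAEDecode"},
        {"id": 4, "type": "VHS_VideoCombine"},
    ]
}

counts = count_node_types(sample_workflow)
for node_type, n in sorted(counts.items()):
    print(f"- {node_type} ({n})")
```

For a real file, load it with `json.load` first and pass the resulting dictionary to `count_node_types`.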

Model Details

Checkpoints (1)

dreamshaperXL_v21TurboDPMSDE.safetensors

LoRAs (1)

OIL_ON_CANVAS_v3.safetensors