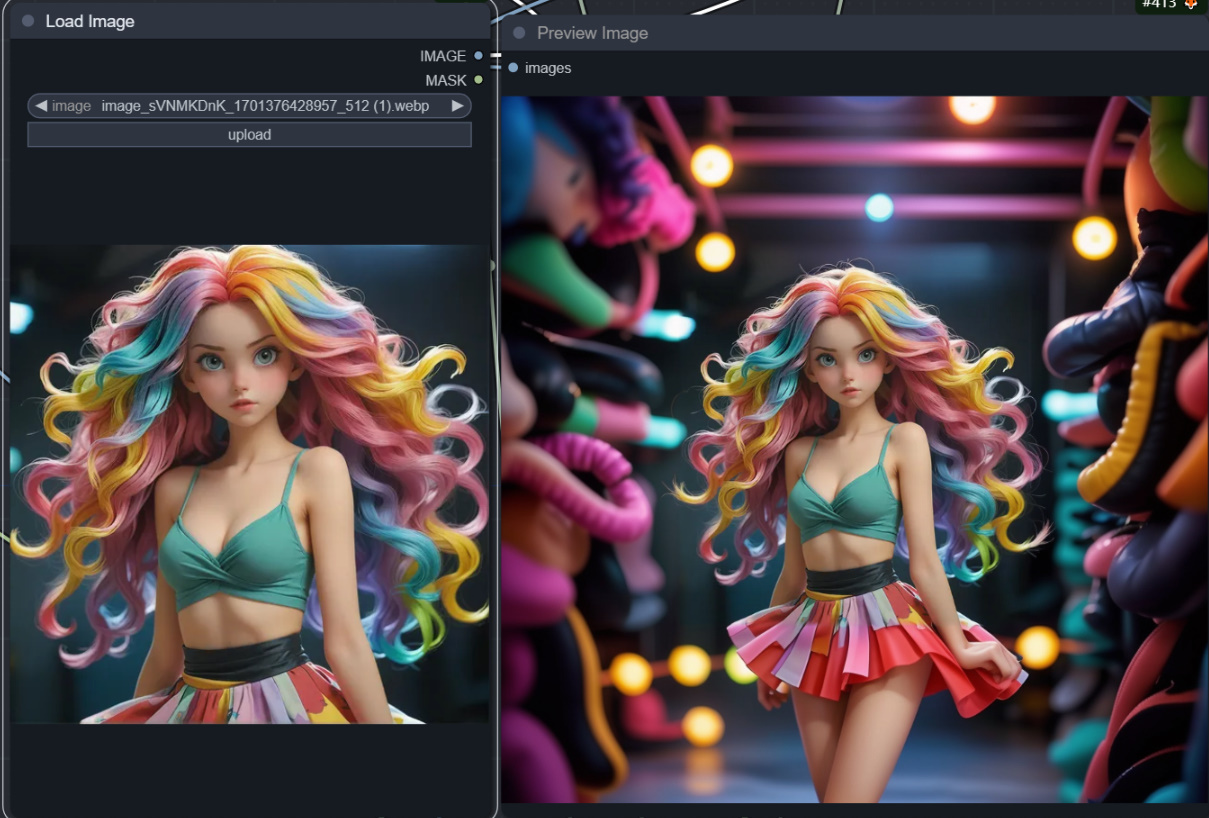

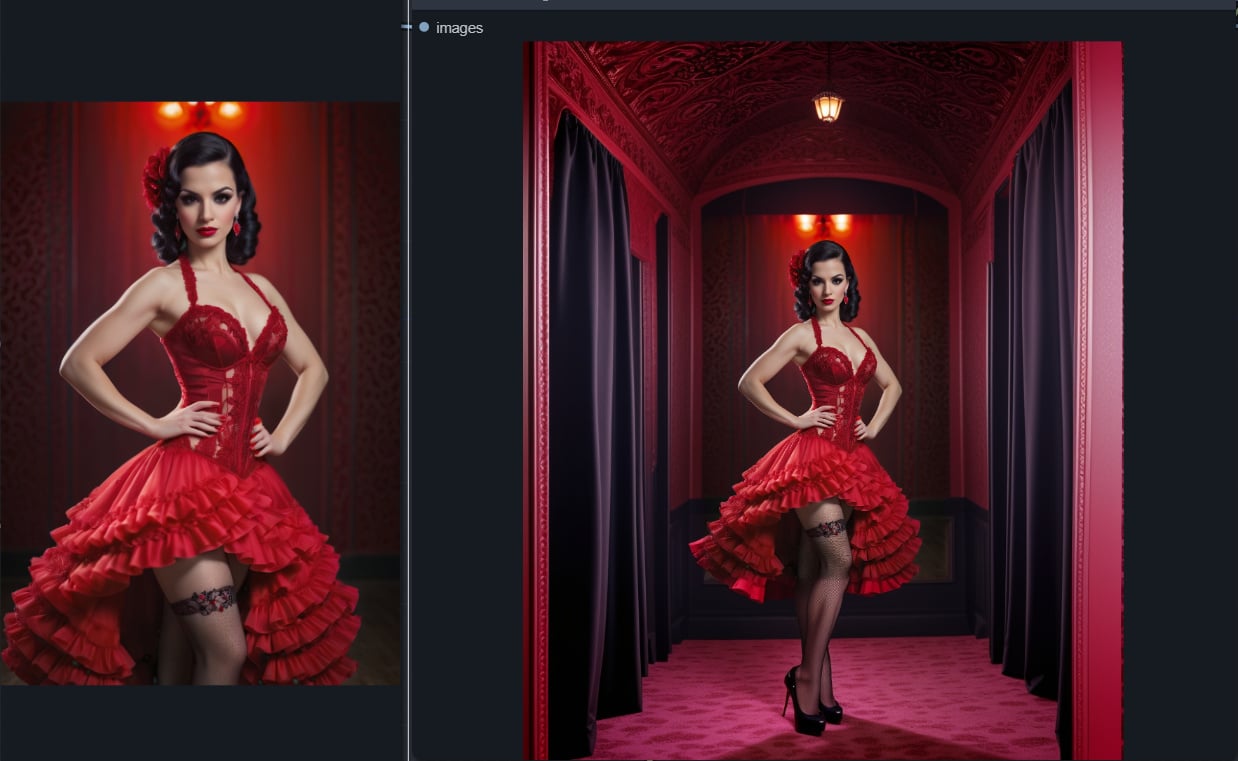

Extended image (no prompts needed)

Description


This workflow has since been updated with many improvements and upgrades. The new version is based on the SDXL model, and I recommend using it instead.


Link to the new workflow:

https://openart.ai/workflows/hornet_splendid_53/extended-outpaintxl-update/RbTrDOJifp89TcHNjo6Z

--------------------------------------------------------------------------------------------------------------------

All of the source images used in the demo are by the author #NeuraLunk. His images are beautiful; if you like his work, you can find him at the URL below.

https://openart.ai/workflows/profile/neuralunk?sort=latest

What this workflow does

It extends (outpaints) an image beyond its original borders.

How to use this workflow

Just drag and drop an image in; no prompt is needed.

How it works

ControlNet's inpaint model is used to guess what the extended areas should contain.
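Before ControlNet can guess anything, the canvas has to be enlarged and the new area marked as "to be generated". The sketch below illustrates that idea on a single-channel image stored as nested lists, filling the new border with neutral gray (0.5 here). In the workflow itself this is handled by ComfyUI's ImagePadForOutpaint node, with GrowMask widening the mask; the function name `pad_for_outpaint` and its `overlap` parameter are hypothetical, and this is not the node's actual source.

```python
from typing import List, Tuple

# Grayscale image as rows of pixel values in [0, 1] (an illustrative stand-in
# for the real RGB tensors ComfyUI passes between nodes).
Image = List[List[float]]


def pad_for_outpaint(
    image: Image,
    left: int,
    top: int,
    right: int,
    bottom: int,
    overlap: int = 0,
    fill: float = 0.5,
) -> Tuple[Image, Image]:
    """Enlarge the canvas and build the matching outpaint mask.

    Returns (padded, mask). In the mask, 1.0 marks pixels to generate and
    0.0 marks original pixels to keep. `overlap` widens the mask that many
    pixels into the original image along each padded side, so the seam
    between old and new content is regenerated as well.
    """
    h, w = len(image), len(image[0])
    new_h, new_w = h + top + bottom, w + left + right
    # Start with a gray canvas that is fully masked (everything to generate).
    padded = [[fill] * new_w for _ in range(new_h)]
    mask = [[1.0] * new_w for _ in range(new_h)]
    for y in range(h):
        for x in range(w):
            padded[y + top][x + left] = image[y][x]
            # Keep an original pixel unless it lies within `overlap` pixels
            # of a border that received padding.
            near_seam = (
                (left > 0 and x < overlap)
                or (right > 0 and x >= w - overlap)
                or (top > 0 and y < overlap)
                or (bottom > 0 and y >= h - overlap)
            )
            mask[y + top][x + left] = 1.0 if near_seam else 0.0
    return padded, mask
```

For example, `pad_for_outpaint([[0.1, 0.2], [0.3, 0.4]], 0, 0, 2, 0)` returns a 2×4 canvas whose mask is 0.0 over the two original columns and 1.0 over the two new ones; with `overlap=1` the right-hand original column is masked too, so the sampler also repaints the seam.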

At the same time, a style model uses the original picture as a reference, so that ControlNet doesn't guess wildly.

The style model can be either CoAdapter or IPAdapter; they reference the style in different ways. I prefer CoAdapter for extending images.

I highly recommend the realisticVisionV60B1VAE checkpoint (3.97 GB) for its great results when extending images!

Model Download

CHECKPOINT

https://civitai.com/models/4201/realistic-vision-v60-b1 (3.97 GB)

Place it in ComfyUI\models\checkpoints

coadapter

https://huggingface.co/TencentARC/T2I-Adapter/blob/main/models/coadapter-style-sd15v1.pth

Place it in ComfyUI\models\style_models
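The coadapter link above (like the coadapter clip vision link further down) opens Hugging Face's file page, a "blob" URL. For a direct download, for example from a script or with `wget`, the same path works with `resolve` in place of `blob`. A small helper, assuming the standard `https://huggingface.co/<owner>/<repo>/blob/<revision>/<path>` layout; the function name is hypothetical. The IPAdapter links are folder ("tree") pages, so you pick a file there first, and the helper rejects them.

```python
from urllib.parse import urlsplit, urlunsplit


def hf_direct_download_url(url: str) -> str:
    """Convert a Hugging Face file page URL to a direct download URL.

    https://huggingface.co/<owner>/<repo>/blob/<revision>/<path>
    becomes
    https://huggingface.co/<owner>/<repo>/resolve/<revision>/<path>
    """
    parts = urlsplit(url)
    if parts.netloc != "huggingface.co":
        raise ValueError(f"not a huggingface.co URL: {url}")
    # "/owner/repo/blob/rev/file" splits into ["", owner, repo, "blob", rev, file, ...]
    segments = parts.path.split("/")
    if len(segments) < 6 or segments[3] != "blob":
        raise ValueError(f"not a single-file ('blob') URL: {url}")
    segments[3] = "resolve"
    return urlunsplit(
        (parts.scheme, parts.netloc, "/".join(segments), parts.query, parts.fragment)
    )


if __name__ == "__main__":
    page = "https://huggingface.co/TencentARC/T2I-Adapter/blob/main/models/coadapter-style-sd15v1.pth"
    print(hf_direct_download_url(page))
    # https://huggingface.co/TencentARC/T2I-Adapter/resolve/main/models/coadapter-style-sd15v1.pth
```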

IPAdapter

https://huggingface.co/h94/IP-Adapter/tree/main/models

Place it in ComfyUI\custom_nodes\ComfyUI_IPAdapter_plus\models

IPAdapter clip vision

https://huggingface.co/h94/IP-Adapter/tree/main/models/image_encoder

Place it in ComfyUI_windows_portable\ComfyUI\models\clip_vision\SD1.5

coadapter clip vision

https://huggingface.co/openai/clip-vit-large-patch14/blob/main/pytorch_model.bin

Place it in ComfyUI_windows_portable\ComfyUI\models\clip_vision\SD1.5

Make sure that all models are compatible with SD1.5.
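To double-check the placement before loading the workflow, a script along these lines may help. It is only a sketch: the folders mirror the list above (relative to the `ComfyUI` folder), the checkpoint file name comes from the Model Details section below, and the coadapter name comes from its URL. For IPAdapter and the CLIP vision encoders, where the exact saved file name may vary, it only checks that the folder holds at least one file. `EXPECTED`, `find_problems`, and `check_models.py` are hypothetical names.

```python
import sys
from pathlib import Path
from typing import Dict, List, Optional

# Folders from the list above, relative to the ComfyUI folder.
# A file name means "this exact file must exist"; None means "the folder
# must contain at least one file" (used where the link is a folder or the
# saved file name may vary).
EXPECTED: Dict[str, Optional[str]] = {
    "models/checkpoints": "realisticVisionV60B1_v60B1VAE.safetensors",
    "models/style_models": "coadapter-style-sd15v1.pth",
    "custom_nodes/ComfyUI_IPAdapter_plus/models": None,
    "models/clip_vision/SD1.5": None,
}


def find_problems(
    comfyui_root: Path, expected: Dict[str, Optional[str]] = EXPECTED
) -> List[str]:
    """Return a human-readable line for every expected model that is missing."""
    problems: List[str] = []
    for folder, name in expected.items():
        target = comfyui_root / folder
        if name is not None:
            if not (target / name).is_file():
                problems.append(f"missing file: {target / name}")
        elif not target.is_dir() or not any(p.is_file() for p in target.iterdir()):
            problems.append(f"no model files in: {target}")
    return problems


if __name__ == "__main__":
    # Usage: python check_models.py [path to the ComfyUI folder]
    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("ComfyUI")
    for line in find_problems(root):
        print(line)
```

No output means every expected file or folder was found; adjust `EXPECTED` if your downloads use different names.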

If you have any questions, please add me on WeChat: knowknow0


Versions (4)

- latest (2 years ago)

- v20231219-081903

- v20231219-081737

- v20231219-072611

Node Details

Primitive Nodes (12)

- IPAdapterApply (1)

- Image scale to side (3)

- Note (4)

- Reroute (4)

Custom Nodes (44)

- Mask Contour (1)

ComfyUI

- StyleModelLoader (1)

- CLIPVisionEncode (1)

- CLIPVisionLoader (2)

- StyleModelApply (1)

- CLIPTextEncode (4)

- ControlNetLoader (1)

- SetLatentNoiseMask (2)

- VAEEncode (2)

- ImageToMask (1)

- GrowMask (2)

- MaskToImage (4)

- ImagePadForOutpaint (1)

- VAEDecode (2)

- PreviewImage (3)

- InvertMask (3)

- KSampler (2)

- LoadImage (1)

- CheckpointLoaderSimple (1)

- InpaintPreprocessor (1)

- IPAdapterModelLoader (1)

- ScaledSoftControlNetWeights (1)

- ACN_AdvancedControlNetApply (1)

- Paste By Mask (2)

- Mask Erode Region (1)

- Mask Gaussian Region (1)

- Mask Dilate Region (1)

Model Details

Checkpoints (1)

realisticVisionV60B1_v60B1VAE.safetensors

LoRAs (0)