Change Background ++ (Redraw background, clothes, hair, face…and Generate foregrounds)

Description

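Multi-function background replacement workflow (updated to V329):

1. Generates a background for the photo's subject (a person, animal, product, etc.) from a text prompt or a reference image.

2. Local redrawing (inpainting), e.g. changing clothes or hairstyles, or replacing objects.

3. Provides face repair, hand repair, and face swapping.

4. Generates foreground elements from a mask drawn in the MaskEditor.

5. Lets you choose how the mask and depth map are generated, and adjust the global influence of ControlNet, IPAdapter, and the mask.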
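Item 5 mentions adjusting the global influence of the mask. The workflow does this with ComfyUI nodes, not code. Purely as an illustration, here is a minimal pure-Python sketch of one common interpretation: grow (dilate) the subject mask, scale it by a global strength factor, and alpha-blend the subject over the generated background. The function names and the `mask_strength` parameter are hypothetical. Images are assumed to be small nested lists of RGB floats in [0, 1] rather than real image tensors.

```python
from typing import List, Tuple

# Assumed representations for this sketch (not ComfyUI's tensor format):
Mask = List[List[float]]                         # values in [0, 1]; 1.0 = subject
Image = List[List[Tuple[float, float, float]]]   # RGB values in [0, 1]


def grow_mask(mask: Mask, pixels: int) -> Mask:
    """Dilate the mask: each output pixel is the maximum value found in a
    square window of radius `pixels` around it (clipped at the borders)."""
    if pixels < 0:
        raise ValueError("pixels must be >= 0")
    if not mask:
        return []
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best = 0.0
            for yy in range(max(0, y - pixels), min(h, y + pixels + 1)):
                for xx in range(max(0, x - pixels), min(w, x + pixels + 1)):
                    best = max(best, mask[yy][xx])
            out[y][x] = best
    return out


def composite(subject: Image, background: Image, mask: Mask,
              mask_strength: float = 1.0) -> Image:
    """Alpha-blend the subject over the background:
    out = a * subject + (1 - a) * background, where a = clamp(mask * mask_strength, 0, 1)."""
    out: Image = []
    for s_row, b_row, m_row in zip(subject, background, mask):
        row = []
        for s, b, m in zip(s_row, b_row, m_row):
            a = min(1.0, max(0.0, m * mask_strength))
            row.append(tuple(a * sc + (1.0 - a) * bc for sc, bc in zip(s, b)))
        out.append(row)
    return out


# Usage: a 3x3 red subject over a 3x3 blue background, one subject pixel in the mask.
red, blue = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)
subject = [[red] * 3 for _ in range(3)]
background = [[blue] * 3 for _ in range(3)]
mask = [[0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0]]

grown = grow_mask(mask, 1)  # the single pixel now covers the whole 3x3 grid
result = composite(subject, background, mask, mask_strength=0.5)
print(result[1][1])  # (0.5, 0.0, 0.5)
print(result[0][0])  # (0.0, 0.0, 1.0)
```

With a strength of 1.0, the subject is restored unchanged wherever the mask is 1.0. Lower values let more of the generated image show through. Growing the mask first gives the blend a margin around the subject's edge.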

Switch the interface language to English before loading the workflow. Otherwise, many nodes will lose their Chinese titles.

版本更新:

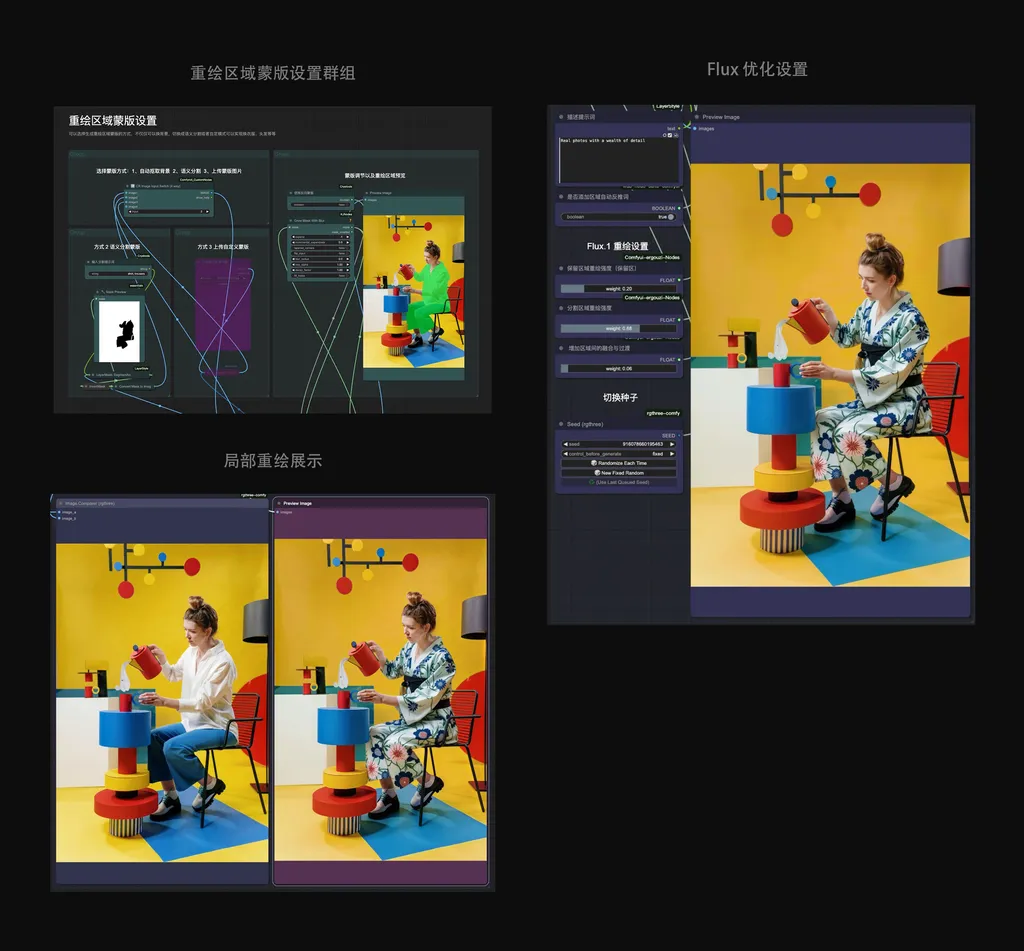

(1031) 主要增加了蒙版编辑群组,有三种方式可以选择,分别是自动抠取背景、通过语义分割获取蒙版以及上传自定义蒙版。通过语义分割节点可以获取局部区域蒙版,实现换服装、发型、物品等等操作。另外增加了使用Flux GGUF模型对重绘区域进一步优化的流程,以及微调了一下操作界面。

(1016) 更新了节点版本。原来的手部修复群组更换成使用Flux GGUF模型来进行修复。

(1004) 重新调整了界面布局,对深度图、高清放大功能进行了优化。

(0803) 使用了IPAdapter Plus新加入的ClipVision Enhancer节点,可以获取更好的背景细节。另外增加了一些效率节点以及调整了一些参数设置。去掉了IC-Light部分,可以另外使用Change Light工作流进行打光。

(0610) 还原细节功能改成复原主体,复原主体后可继续对脸部和手部进行修复。抠图节点替换成BiRefNet,效果很棒!

(0609) 修复偶尔出现边框的问题,提升背景生成效果。

(0607) 增加还原主体细节节点(需要时启用)

(0606) 主要优化了前景生成效果

(0604) 稍微调整了下界面布局,替换了一些节点以及增加了几个控制器

(0602) 优化IC-Light打光,提供了原图色彩迁移功能开关,可以还原原本的色彩
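The (1031) entry describes three ways to obtain the mask. Below is a minimal sketch of how such a selector might decide which area gets redrawn. It makes three hypothetical assumptions: the automatic option yields a subject mask whose inverse is the background to redraw, the segmentation option yields a per-pixel label map, and a custom mask is used as-is. The label names (`hair`, `upper_clothes`, ...) and the mode strings are illustrative only and do not match the output format of any specific segmentation node.

```python
from typing import Iterable, List, Optional

LabelMap = List[List[str]]   # hypothetical: one class name per pixel
Mask = List[List[float]]     # 1.0 = area to redraw, 0.0 = keep


def labels_to_mask(label_map: LabelMap, selected: Iterable[str]) -> Mask:
    """1.0 wherever the pixel's class is in `selected`, else 0.0."""
    wanted = set(selected)
    return [[1.0 if label in wanted else 0.0 for label in row] for row in label_map]


def invert_mask(mask: Mask) -> Mask:
    """Swap kept and redrawn areas."""
    return [[1.0 - v for v in row] for row in mask]


def redraw_mask(mode: str, *,
                subject_mask: Optional[Mask] = None,
                label_map: Optional[LabelMap] = None,
                selected: Iterable[str] = (),
                custom_mask: Optional[Mask] = None) -> Mask:
    """Hypothetical selector for the three mask options.

    'auto'    - invert an automatically extracted subject mask, so the background is redrawn
    'segment' - redraw only the selected semantic classes (e.g. clothing, hair)
    'custom'  - use an uploaded mask as-is
    """
    if mode == "auto":
        if subject_mask is None:
            raise ValueError("'auto' needs subject_mask")
        return invert_mask(subject_mask)
    if mode == "segment":
        if label_map is None:
            raise ValueError("'segment' needs label_map")
        return labels_to_mask(label_map, selected)
    if mode == "custom":
        if custom_mask is None:
            raise ValueError("'custom' needs custom_mask")
        return custom_mask
    raise ValueError(f"unknown mode: {mode!r}")


# Usage: redraw only the clothing, or only the background, of a tiny 3x3 "portrait".
label_map = [
    ["background", "hair", "background"],
    ["background", "face", "background"],
    ["upper_clothes", "upper_clothes", "upper_clothes"],
]
clothes = redraw_mask("segment", label_map=label_map, selected=["upper_clothes"])
print(clothes)  # [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]

subject = labels_to_mask(label_map, ["hair", "face", "upper_clothes"])
print(redraw_mask("auto", subject_mask=subject))  # [[1.0, 0.0, 1.0], [1.0, 0.0, 1.0], [0.0, 0.0, 0.0]]
```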

Because the workflow is continually updated, its interface now differs considerably from the one shown in the tutorial videos, so treat the videos as a general reference only.

How-to video (V3 version)

https://youtu.be/42b5DBCzPX0?si=zy1Jz7ExuF9hgITf

https://www.bilibili.com/video/BV1MU411o7et/?vd_source=c52b6f7c72230aea1ae80463f968691a

How-to video (V2 version)

https://www.bilibili.com/video/BV1Cx4y167fc/?vd_source=c52b6f7c72230aea1ae80463f968691a

https://www.youtube.com/watch?v=yubqVCOf3bo

Versions (31)

- latest

- v20250131-080800

- v20241119-051847

- v20241116-060936

- v20241031-152314

- v20241016-154703

- v20241016-032059

- v20241004-064103

- v20241003-082029

- v20240928-031937

- v20240826-072329

- v20240803-062601

- v20240802-161323

- v20240622-044258

- v20240617-094320

- v20240614-061839

- v20240610-142850

- v20240609-063012

- v20240607-125124

- v20240604-031135

- v20240603-061324

- v20240531-040630

- v20240530-124040

- v20240506-075753

- v20240502-044557

- v20240430-032134

- v20240428-101014

- v20240428-100948

- v20240425-170009

- v20240424-144742

- v20240423-150355

Node Details

Primitive Nodes (478)

- ACN_AdvancedControlNetApply_v2 (11)

- ACN_ScaledSoftControlNetWeights (3)

- Any Switch (rgthree) (17)

- DF_Float (19)

- DF_Int_to_Float (3)

- DF_Integer (7)

- DF_Text_Box (3)

- DepthAnythingV2Preprocessor (1)

- DownloadAndLoadFlorence2Model (1)

- Fast Groups Bypasser (rgthree) (6)

- Fast Groups Muter (rgthree) (1)

- Florence2Run (3)

- GetNode (168)

- IPAdapterClipVisionEnhancer (2)

- Image Comparer (rgthree) (1)

- Int-🔬 (1)

- Label (rgthree) (15)

- LayerMask: BiRefNetUltraV2 (1)

- LayerMask: LoadBiRefNetModelV2 (1)

- Lora Loader Stack (rgthree) (2)

- MaskBatchComposite(FaceParsing) (1)

- Note (3)

- Note Plus (mtb) (6)

- Primitive boolean [Crystools] (6)

- Primitive float [Crystools] (1)

- Primitive integer [Crystools] (8)

- Reroute (7)

- Seed (rgthree) (4)

- SetNode (101)

- Switch any [Crystools] (13)

- XIS_Float_Slider (17)

- XIS_INT_Slider (1)

- XIS_Label (41)

- XIS_PromptsWithSwitches (1)

- easy isMaskEmpty (2)

Custom Nodes (300)

- ImageEffectsAdjustment (1)

- CM_FloatBinaryOperation (1)

- CM_FloatUnaryOperation (1)

- CR Conditioning Input Switch (2)

- CR String To Combo (2)

- CR Color Panel (7)

- CR Image Input Switch (4 way) (2)

ComfyUI

- CLIPTextEncode (10)

- VAEDecode (5)

- ControlNetLoader (6)

- PreviewImage (32)

- EmptyLatentImage (1)

- KSampler (5)

- InvertMask (9)

- LatentUpscaleBy (2)

- CLIPSetLastLayer (1)

- PerturbedAttentionGuidance (3)

- MaskToImage (9)

- ImageBlend (1)

- ConditioningSetMask (2)

- GrowMask (5)

- MaskComposite (6)

- ImageScale (2)

- UpscaleModelLoader (2)

- SetLatentNoiseMask (2)

- VAEEncode (2)

- ImageToMask (8)

- DifferentialDiffusion (2)

- ImageCompositeMasked (1)

- LoadImage (6)

- EmptyImage (1)

- CheckpointLoaderSimple (1)

- UNETLoader (1)

- DualCLIPLoader (1)

- VAELoader (1)

- easy imageColorMatch (1)

- easy cleanGpuUsed (2)

- easy boolean (4)

- easy promptList (1)

- easy int (1)

- MaskPreview+ (9)

- SimpleMath+ (23)

- MaskBlur+ (6)

- ImageResize+ (13)

- ImageCASharpening+ (3)

- GetImageSize+ (5)

- ImageCompositeFromMaskBatch+ (5)

- ImageCrop+ (1)

- BboxDetectorCombined_v2 (1)

- ImpactSimpleDetectorSEGS (3)

- SegsToCombinedMask (3)

- ImpactSwitch (1)

- ImpactStringSelector (2)

- ImpactImageBatchToImageList (1)

- SAMLoader (2)

- PreviewBridge (2)

- UltralyticsDetectorProvider (3)

- FaceDetailer (1)

- MaskListToMaskBatch (1)

- LayerColor: ColorAdapter (1)

- LayerUtility: ColorImage V2 (1)

- LayerColor: Brightness & Contrast (3)

- LayerUtility: TextBox (2)

- LayerMask: MaskEdgeUltraDetail V2 (1)

- LayerUtility: ImageBlend V2 (2)

- LayerMask: SegmentAnythingUltra V2 (1)

- DWPreprocessor (1)

- DepthAnythingPreprocessor (1)

- InpaintPreprocessor (3)

- LineArtPreprocessor (1)

- AIO_Preprocessor (1)

- IPAdapterUnifiedLoaderFaceID (1)

- IPAdapterFaceID (1)

- IPAdapterUnifiedLoader (2)

- OutlineMask (2)

- AnyLineArtPreprocessor_aux (2)

- SDXL Prompt Styler (JPS) (1)

- RemapMaskRange (5)

- GrowMaskWithBlur (2)

- ColorMatch (1)

- ConditioningMultiCombine (1)

- CreateGradientFromCoords (1)

- ResizeMask (3)

- MaskBatchMulti (1)

- SplineEditor (1)

- Image To Mask (1)

- ShowText|pysssss (2)

- UltimateSDUpscale (1)

- JWMaskResize (2)

- Bounded Image Crop with Mask (1)

- Text Concatenate (4)

- Image Blend by Mask (2)

- Image Blend (3)

- Logic Boolean Primitive (3)

- Mask Crop Region (2)

Model Details

Checkpoints (1)

realisticVisionV60B1_v51HyperVAE.safetensors

LoRAs (0)