Magnific.ai-like style change effect for SDXL

5.0

1 review

Description

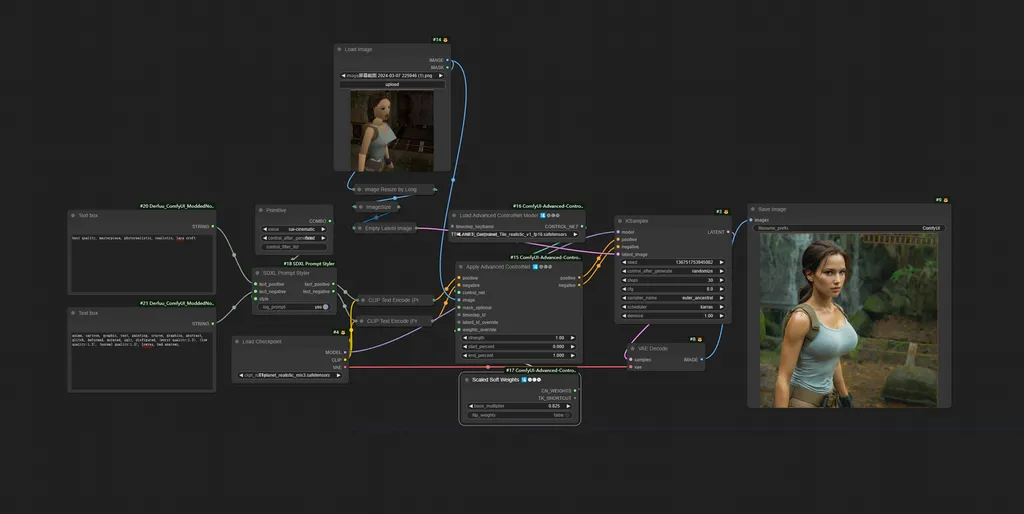

This workflow reproduces the effect of the magnific.ai style change application.

Simply upload a picture as shown here, and use the SDXL tile model I trained (TTPlanet_SDXL_Tile).

Describe the image, then generate it; the result should look much like what magnific.ai produces.

I have uploaded the example Lara image for you to test with. If you want the temple background, put the temple prompt in the text box.

I merged the SDXL style change node into the workflow, so you can easily try more styles.
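
For readers who want to see how the steps above (load the image, apply the tile ControlNet, describe the image, sample) fit together, here is a minimal sketch of a comparable graph in ComfyUI's API JSON format. It is not the exported workflow. It assumes the core `ControlNetLoader` and `ControlNetApply` nodes in place of the Advanced-ControlNet custom nodes used here, and it leaves out `SDXLPromptStyler` and the resize nodes. The ControlNet file name, the sampler settings, and the function names `build_style_change_graph` and `to_payload` are all hypothetical placeholders.

```python
import json


def build_style_change_graph(image_name, prompt_text, negative_text="",
                             width=1024, height=1024, seed=0):
    """Return a graph in ComfyUI API format: node id -> class_type + inputs.

    A link to another node's output is written as [node_id, output_index].
    """
    return {
        # Outputs of CheckpointLoaderSimple: 0 = MODEL, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "TTplanet_realistic_mix3.safetensors"}},
        # Positive prompt: the description of the image (plus e.g. "temple")
        "2": {"class_type": "CLIPTextEncode",
              "inputs": {"text": prompt_text, "clip": ["1", 1]}},
        # Negative prompt
        "3": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative_text, "clip": ["1", 1]}},
        # The uploaded source picture
        "4": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        # Assumption: core ControlNet nodes; the file name is a placeholder
        "5": {"class_type": "ControlNetLoader",
              "inputs": {"control_net_name": "TTPlanet_SDXL_Tile.safetensors"}},
        "6": {"class_type": "ControlNetApply",
              "inputs": {"conditioning": ["2", 0], "control_net": ["5", 0],
                         "image": ["4", 0], "strength": 1.0}},
        "7": {"class_type": "EmptyLatentImage",
              "inputs": {"width": width, "height": height, "batch_size": 1}},
        # Sampler settings below are illustrative, not the workflow's values
        "8": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["6", 0],
                         "negative": ["3", 0], "latent_image": ["7", 0],
                         "seed": seed, "steps": 30, "cfg": 7.0,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": 1.0}},
        "9": {"class_type": "VAEDecode",
              "inputs": {"samples": ["8", 0], "vae": ["1", 2]}},
        "10": {"class_type": "SaveImage",
               "inputs": {"images": ["9", 0],
                          "filename_prefix": "style_change"}},
    }


def to_payload(graph):
    """Wrap the graph in the JSON body that ComfyUI's /prompt endpoint accepts."""
    return json.dumps({"prompt": graph})


graph = build_style_change_graph("lara.png", "Lara Croft in front of a temple")
payload = to_payload(graph)
```

The resulting `payload` string could be sent with a POST request to a running ComfyUI server's `/prompt` endpoint. Changing `prompt_text` plays the same role as editing the text box in the workflow.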

Versions (1)

- latest (2 years ago)

Node Details

Primitive Nodes (3)

PrimitiveNode (1)

Text box (2)

Custom Nodes (14)

ComfyUI

- CheckpointLoaderSimple (1)

- CLIPTextEncode (2)

- LoadImage (1)

- EmptyLatentImage (1)

- KSampler (1)

- VAEDecode (1)

- SaveImage (1)

- easy imageSize (1)

- ACN_AdvancedControlNetApply (1)

- ControlNetLoaderAdvanced (1)

- ScaledSoftControlNetWeights (1)

- SDXLPromptStyler (1)

- JWImageResizeByLongerSide (1)

Model Details

Checkpoints (1)

TTplanet_realistic_mix3.safetensors

LoRAs (0)