AnimateDiff Flicker-Free Animation Video Workflow

Description

Thank you to everyone who has joined my Patreon. However, some people try to game the system by subscribing and cancelling on the same day, which causes Patreon's fraud detection system to flag the action as suspicious and block it automatically. If you try something shady on a platform, please don't come here to blame me or leave comments bad-mouthing the workflow. I am simply trying to focus on building workflows, improving them, and sharing them publicly with like-minded people. I don't have time to monitor the activity of everyone who joins my Patreon.

What this workflow does

Just one workflow! Just one! And you can create amazing animations!

👉 Create amazing animations with a vid2vid method that gives a new action video a unique-looking style. I leverage the LCM LoRA to speed up image frame generation, and I use IPAdapter to enhance the style of each frame, which gives strong backup support for LCM's low sampling step count.
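To see why the LCM LoRA speeds things up, it helps to count denoising work. The sketch below is illustrative arithmetic only: it assumes roughly 25 steps for a regular SD1.5 sampler and 8 steps with LCM, plus a hypothetical 120-frame clip; none of these numbers are read from this workflow.

```python
# Illustrative arithmetic only. The frame count and step counts are hypothetical
# example values, not settings read from this workflow.


def frame_steps(frames: int, steps: int) -> int:
    """Total frame-denoising steps for a clip: every frame is denoised once per step.
    Ignores CFG's extra unconditional pass and AnimateDiff context-window overlap."""
    return frames * steps


def step_speedup(standard_steps: int, lcm_steps: int) -> float:
    """Rough speedup from fewer steps, assuming each step costs about the same."""
    return standard_steps / lcm_steps


if __name__ == "__main__":
    frames = 120  # hypothetical clip: 5 seconds at 24 fps
    print("standard (25 steps):", frame_steps(frames, 25))  # 3000
    print("LCM (8 steps):", frame_steps(frames, 8))          # 960
    print("speedup:", step_speedup(25, 8))                   # 3.125
```

Cutting steps is where the time saving comes from; the trade-off is less refinement per frame, which is the gap IPAdapter is used to cover.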

How to use this workflow

👉 Use AnimateDiff as the core for creating smooth, flicker-free animation. Simply load a source video and create a travel prompt to style the animation. You can also use IPAdapter to skin the video's style, such as characters, objects, or the background.
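A travel prompt assigns different prompts to keyframes and lets the frames in between drift from one prompt to the next. This workflow does that with the BatchPromptSchedule node; the sketch below only illustrates the idea by computing per-frame blend weights. The `"frame": "prompt"` keyframe syntax and the linear blending are assumptions modeled loosely on that node, not its actual implementation, and the real node blends text conditioning rather than returning weights.

```python
from __future__ import annotations

import json
from bisect import bisect_right


def parse_schedule(text: str) -> dict[int, str]:
    """Parse '"frame": "prompt"' keyframe pairs (hypothetical simplified format)."""
    raw = json.loads("{" + text + "}")
    return {int(frame): prompt for frame, prompt in raw.items()}


def prompt_weights(schedule: dict[int, str], frame: int) -> list[tuple[str, float]]:
    """Blend weights for one frame, linearly interpolating between the two
    keyframes that surround it. Frames outside the keyframe range use the
    nearest keyframe's prompt at full weight."""
    if not schedule:
        raise ValueError("schedule must contain at least one keyframe")
    keys = sorted(schedule)
    if frame <= keys[0]:
        return [(schedule[keys[0]], 1.0)]
    if frame >= keys[-1]:
        return [(schedule[keys[-1]], 1.0)]
    i = bisect_right(keys, frame)  # keys[i - 1] <= frame < keys[i]
    lo, hi = keys[i - 1], keys[i]
    t = (frame - lo) / (hi - lo)
    return [(schedule[lo], 1.0 - t), (schedule[hi], t)]


if __name__ == "__main__":
    schedule = parse_schedule(
        '"0": "a knight in silver armor", "24": "a knight in golden armor"'
    )
    print(prompt_weights(schedule, 6))
    # [('a knight in silver armor', 0.75), ('a knight in golden armor', 0.25)]
```

Frame 6 sits a quarter of the way from keyframe 0 to keyframe 24, so the second prompt gets a quarter of the weight.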

Tips about this workflow

👉 Workflow Version 10 Walkthrough: https://youtu.be/Sg3KgA3_fPU?si=YFykW0Dkpjz1D2Ii

👉 Workflow Version 8.5 Walkthrough: https://youtu.be/j4BEWNvrYio

👉 Workflow Version 7 Walkthrough: https://youtu.be/md6YzGX741c

👉 Workflow Version 6 Walkthrough: https://youtu.be/g9QYXvVxkkM

👉 Full tutorial on using this workflow: https://youtu.be/wFahkr-b7HI

👉 Tutorial Using LCM Checkpoint Mode: https://www.youtube.com/watch?v=AoUlADxSDAg

🎥 Video demo links

👉 V.10 Demo: https://www.youtube.com/shorts/M6vcwuBn14s

👉 V.8.5 Demo: https://youtu.be/j4BEWNvrYio

👉 V.7 Demo (with Image Masks enabled for the background): https://www.youtube.com/shorts/3V7bmNRwM2o

👉 V.6 Demo (with masked background): https://www.youtube.com/shorts/EO2OQBugHU0

👉 Version 6 Demo: https://youtube.com/shorts/uBUaDZopjuw?feature=share

👉 Demo 1: https://youtube.com/shorts/FJiP8qEzb4Q?feature=share

👉 Demo 2: https://youtube.com/shorts/Pa0Fd7ezb5I?feature=share

👉 Demo 3: https://youtube.com/shorts/LUn2LG0LW38?feature=share

👉 Demo 4 (using Dreamshaper 8 LCM): https://www.youtube.com/shorts/w0xROu1TqfM

👉 Demo 5 (AnimateDiff Evolution From Normal To Enhanced Detail): https://www.youtube.com/shorts/WINY9ODRhm8

Updates:

Version: 2024-01-18 (Version 10)

- Added GET/SET nodes to make the diagram cleaner.

- Fixed the Version 7 SEG group for background removal.

- Two IPAdapter groups: one for a single image, another for multi-image IPA.
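The multi-image group encodes several reference images and applies them through one IPAdapter. One simple way to picture combining several references is a weighted average of their image embeddings. Whether the IPAdapterEncoder and IPAdapterApplyEncoded nodes combine embeddings exactly this way is an assumption here, and real CLIP Vision embeddings are tensors rather than the short toy lists used below.

```python
from __future__ import annotations


def average_embeddings(
    embeds: list[list[float]], weights: list[float] | None = None
) -> list[float]:
    """Weighted mean of equal-length embedding vectors (toy stand-in for
    CLIP Vision image embeddings of several reference images)."""
    if not embeds:
        raise ValueError("need at least one embedding")
    dim = len(embeds[0])
    if any(len(e) != dim for e in embeds):
        raise ValueError("all embeddings must have the same length")
    if weights is None:
        weights = [1.0] * len(embeds)
    if len(weights) != len(embeds):
        raise ValueError("one weight per embedding is required")
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return [
        sum(w * e[i] for w, e in zip(weights, embeds)) / total for i in range(dim)
    ]


if __name__ == "__main__":
    refs = [[1.0, 0.0], [0.0, 1.0]]              # two toy "reference image" embeddings
    print(average_embeddings(refs))              # [0.5, 0.5]
    print(average_embeddings(refs, [3.0, 1.0]))  # [0.75, 0.25]
```

Raising one image's weight pulls the combined style toward that reference, which is the intuition behind using several images rather than one.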

Version: 2024-01-10

Explainer video: https://youtu.be/j4BEWNvrYio

- Added the IPAdapter FaceID Plus V2 feature.

- Three ControlNet groups; depending on your needs, you can reduce them to two.

- Minor fixes to nodes.
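Turning one ControlNet group off simply removes it from the chain of ControlNetApplyAdvanced nodes. The sketch below models that as a small plan, assuming the three groups are LineArt, DWPose and Canny (the preprocessors listed under Node Details); the strengths and start/end values are hypothetical. It also approximates which sampling steps each ControlNet touches by treating progress as step / steps, whereas ComfyUI converts these percentages through the model's noise schedule, so real boundaries can differ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ControlNetGroup:
    name: str             # hypothetical label for one ControlNet group
    enabled: bool         # False = group bypassed in the graph
    strength: float
    start_percent: float  # fraction of sampling where the ControlNet starts
    end_percent: float    # fraction of sampling where it stops


def active_steps(steps: int, start_percent: float, end_percent: float) -> list[int]:
    """Approximate the steps a ControlNet affects, treating progress as step / steps."""
    return [s for s in range(steps) if start_percent <= s / steps < end_percent]


def plan(groups: list[ControlNetGroup], steps: int) -> dict[str, list[int]]:
    """Steps per group, skipping bypassed or zero-strength groups."""
    return {
        g.name: active_steps(steps, g.start_percent, g.end_percent)
        for g in groups
        if g.enabled and g.strength > 0
    }


if __name__ == "__main__":
    groups = [
        ControlNetGroup("lineart", True, 0.8, 0.0, 1.0),
        ControlNetGroup("dwpose", True, 1.0, 0.0, 1.0),
        ControlNetGroup("canny", False, 0.5, 0.0, 0.5),  # optional third group, off
    ]
    print(plan(groups, 8))  # only lineart and dwpose, each active on steps 0-7
```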

Version: 2023-12-24

- A little cleanup of bypassed nodes, removing some nodes that are no longer needed.

- The Image Mask group for the video background is being removed from this workflow and will be moved into a separate new AnimateDiff workflow. In testing, source videos with a solid background color gave better results.
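One reason a solid background color helps is that a single key color can be separated with a simple color threshold. The sketch below shows that idea on a toy frame of 8-bit RGB pixels; it is loosely inspired by the MaskFromColor+ node listed under Node Details, but the per-channel threshold rule is an assumed simplification, not that node's code.

```python
from __future__ import annotations

Pixel = tuple[int, int, int]  # 8-bit RGB


def color_key_mask(image: list[list[Pixel]], key: Pixel, threshold: int) -> list[list[float]]:
    """1.0 where every channel is within `threshold` of `key` (background), else 0.0."""
    return [
        [1.0 if all(abs(c - k) <= threshold for c, k in zip(px, key)) else 0.0 for px in row]
        for row in image
    ]


def invert(mask: list[list[float]]) -> list[list[float]]:
    """Turn a background mask into a character (foreground) mask."""
    return [[1.0 - v for v in row] for row in mask]


if __name__ == "__main__":
    green = (0, 255, 0)
    frame = [[(3, 250, 6), (180, 120, 90)]]  # one row: green-screen pixel, skin-tone pixel
    bg = color_key_mask(frame, green, threshold=10)
    print(bg, invert(bg))  # [[1.0, 0.0]] [[0.0, 1.0]]
```

With a busy, multi-colored background no single key color and threshold separates the character cleanly, which is where a detector-based mask group becomes necessary.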

Version: 2023-12-19 (V.7)

- Added an Image Mask group that detects the character and removes the background before the LineArt ControlNet.

This group suits character-focused animation, with IPAdapter used to stylize the animation background.
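The node list includes EmptyImage and ImageCompositeMasked, which suggests (an assumption, not stated in the notes) that the detected character is placed onto a plain image so LineArt traces only the character. Masked compositing itself is a per-pixel linear blend; this toy version uses nested lists of RGB values in 0.0 to 1.0 instead of ComfyUI's image tensors.

```python
from __future__ import annotations

RGB = tuple[float, float, float]  # channel values in 0.0-1.0


def composite(
    fg: list[list[RGB]], bg: list[list[RGB]], mask: list[list[float]]
) -> list[list[RGB]]:
    """Per-pixel blend: mask * fg + (1 - mask) * bg."""
    if not (len(fg) == len(bg) == len(mask)):
        raise ValueError("fg, bg and mask must have the same height")
    out: list[list[RGB]] = []
    for row_f, row_b, row_m in zip(fg, bg, mask):
        if not (len(row_f) == len(row_b) == len(row_m)):
            raise ValueError("fg, bg and mask must have the same width")
        out.append([
            tuple(m * f + (1.0 - m) * b for f, b in zip(pf, pb))
            for pf, pb, m in zip(row_f, row_b, row_m)
        ])
    return out


if __name__ == "__main__":
    white, black = (1.0, 1.0, 1.0), (0.0, 0.0, 0.0)
    fg = [[white, white]]  # "character" pixels
    bg = [[black, black]]  # plain replacement background
    mask = [[1.0, 0.5]]    # hard edge, then a soft (blurred) edge
    print(composite(fg, bg, mask))  # [[(1.0, 1.0, 1.0), (0.5, 0.5, 0.5)]]
```

Soft mask values, such as those produced by growing or blurring a mask, blend the two images instead of cutting hard edges.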

Version: 2023-12-14

- Fixed slow loading time in the OpenPose ControlNet.

This part now uses the DW Pose preprocessor instead of the OpenPose preprocessor, because DW Pose loads image frames much faster than OpenPose. (I tested this with a 700-frame video.)

The second reason is that DWPose detects more detail in a character's hands and face.
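The 700-frame test above also touches on how AnimateDiff handles long clips: SD1.5 motion modules work on a limited number of frames at a time (16 is typical), so the workflow's ADE_AnimateDiffUniformContextOptions node splits the video into overlapping context windows. The sketch below is a plain sliding window with assumed values context_length=16 and overlap=4; AnimateDiff-Evolved's actual uniform schedule also supports strides and closed loops and differs in detail.

```python
from __future__ import annotations


def context_windows(
    num_frames: int, context_length: int = 16, overlap: int = 4
) -> list[list[int]]:
    """Cover frames 0..num_frames-1 with windows of `context_length` frames,
    consecutive windows sharing `overlap` frames. The last window is shifted
    back so it ends exactly at the final frame."""
    if num_frames <= 0:
        raise ValueError("num_frames must be positive")
    if num_frames <= context_length:
        return [list(range(num_frames))]
    hop = context_length - overlap
    if hop <= 0:
        raise ValueError("overlap must be smaller than context_length")
    windows = []
    start = 0
    while start + context_length < num_frames:
        windows.append(list(range(start, start + context_length)))
        start += hop
    windows.append(list(range(num_frames - context_length, num_frames)))
    return windows


if __name__ == "__main__":
    print(len(context_windows(700)))  # 58 windows for a 700-frame clip
    print(context_windows(40)[-1])    # frames 24..39
```

The shared frames between neighboring windows are what is meant to keep motion consistent from one window to the next.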

Version: 2023-12-13 (2)

- Fixed Detailer settings for LCM. Please note that the sampling method MUST be the same in the AnimateDiff KSampler and in the "Detailer For AnimateDiff (SEGS/pipe)" node. If you use a different sampling method, you must change both to the same sampler name, the same seed, and the same CFG.
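The rule above boils down to comparing three widget values across two nodes. A minimal pre-flight check, assuming you copy the values from both nodes by hand; the seed and CFG numbers below are placeholders, and `lcm` is the LCM sampler name in ComfyUI's sampler list.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class SamplerSettings:
    sampler_name: str
    seed: int
    cfg: float


def mismatched_fields(ksampler: SamplerSettings, detailer: SamplerSettings) -> list[str]:
    """Names of the settings that differ between the AnimateDiff KSampler and the Detailer."""
    return [
        f.name
        for f in fields(SamplerSettings)
        if getattr(ksampler, f.name) != getattr(detailer, f.name)
    ]


if __name__ == "__main__":
    ksampler = SamplerSettings(sampler_name="lcm", seed=123456, cfg=1.5)
    detailer = SamplerSettings(sampler_name="euler", seed=123456, cfg=1.5)
    print(mismatched_fields(ksampler, detailer))  # ['sampler_name']
```

An empty list means the two nodes agree on everything this note says must match.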

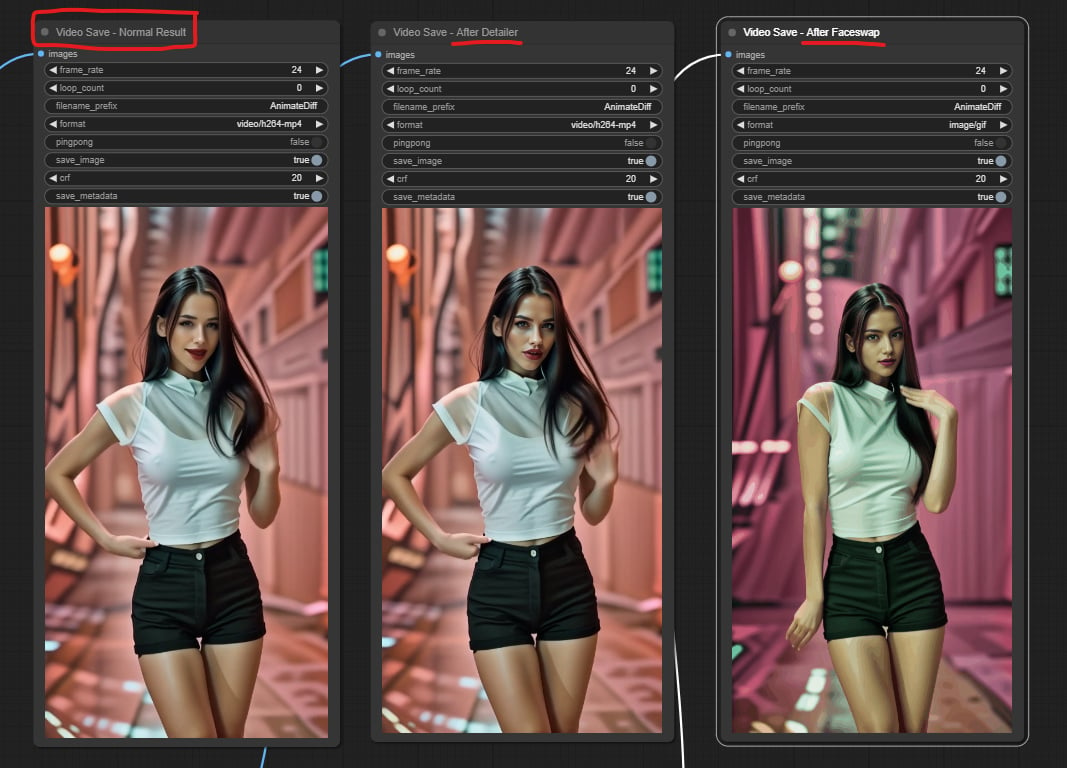

- Fixed the Faceswap node group after the Detailer. You can turn off the ReActor Faceswap node, or simply bypass the group, if you do not need the face swap feature.

Version: 2023-12-13

- Added a Detailer to enhance image frame quality.

- Added 3 Video Combine nodes for result comparison.


Versions (10)

- latest

- v20240109-164712

- v20231224-205959

- v20231218-215410

- v20231213-192653

- v20231213-160434

- v20231213-092524

- v20231213-090558

- v20231209-135038

- v20231206-185736

Node Details

Primitive Nodes (50)

- GetNode (21)

- IPAdapterApply (1)

- IPAdapterApplyEncoded (1)

- IPAdapterApplyFaceID (1)

- InsightFaceLoader (1)

- Integer (3)

- Note (1)

- PrepImageForInsightFace (1)

- Reroute (2)

- SetNode (18)

Custom Nodes (64)

- ADE_AnimateDiffUniformContextOptions (1)

- CheckpointLoaderSimpleWithNoiseSelect (1)

- ADE_AnimateDiffLoaderWithContext (1)

- CR Seed (1)

ComfyUI

- ImageScale (1)

- EmptyImage (1)

- LoraLoader (1)

- ModelSamplingDiscrete (1)

- PreviewImage (5)

- GrowMask (1)

- ImageCompositeMasked (1)

- ControlNetApplyAdvanced (3)

- LoadImage (6)

- CLIPVisionLoader (3)

- LoraLoaderModelOnly (1)

- VAELoader (1)

- CLIPSetLastLayer (1)

- CLIPTextEncode (1)

- VAEEncode (1)

- VAEDecode (1)

- ImageToMask (1)

- KSampler (1)

- MaskBlur+ (1)

- MaskFromColor+ (1)

- ImageCASharpening+ (1)

- SAMLoader (1)

- SEGSPaste (1)

- ToBasicPipe (1)

- UltralyticsDetectorProvider (1)

- ImpactSimpleDetectorSEGS_for_AD (1)

- SEGSDetailerForAnimateDiff (1)

- OneFormer-COCO-SemSegPreprocessor (1)

- DWPreprocessor (1)

- CannyEdgePreprocessor (1)

- LineArtPreprocessor (1)

- IPAdapterModelLoader (3)

- PrepImageForClipVision (1)

- IPAdapterEncoder (1)

ComfyUI_tinyterraNodes

- ttN text (1)

- ControlNetLoaderAdvanced (3)

- VHS_LoadVideo (1)

- VHS_VideoCombine (3)

- BatchPromptSchedule (1)

- ReActorFaceSwap (1)

- Image Resize (1)

Model Details

Checkpoints (1)

SD1_5\realisticVisionV60B1_v60B1VAE.safetensors

LoRAs (2)

SD1-5\lcm-lora-sdv1-5_lora_weights.safetensors

ip-adapter-faceid-plusv2_sd15_lora.safetensors