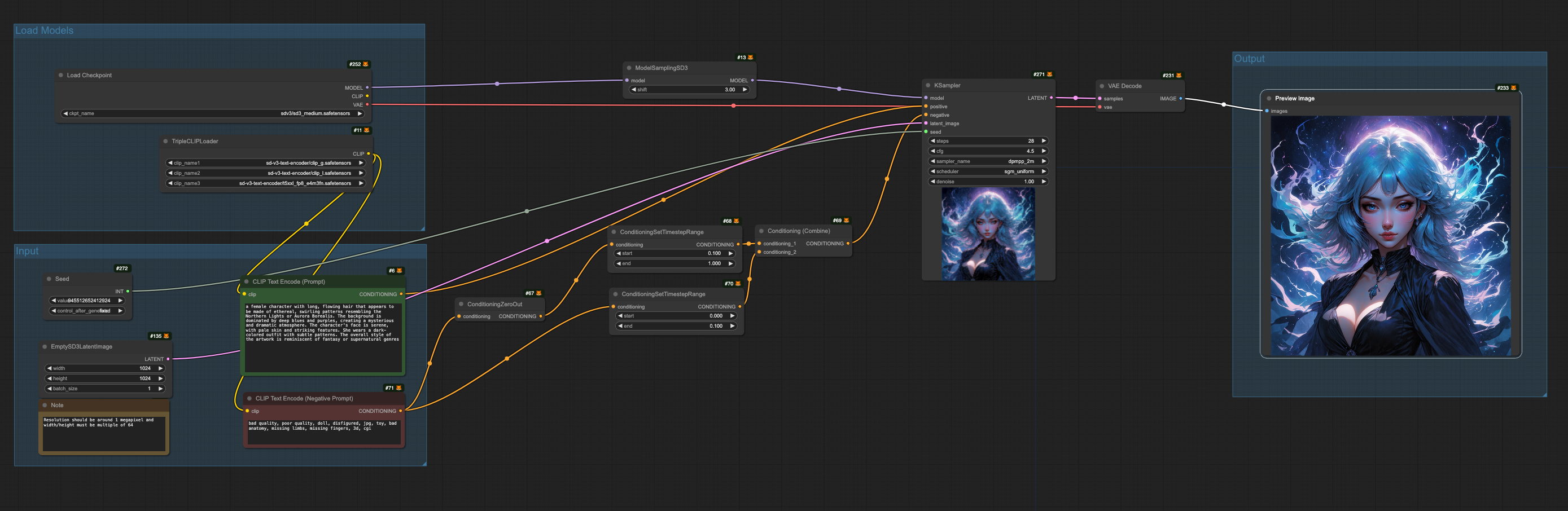

Stable Diffusion 3 Medium Workflow Example - Basic


Model

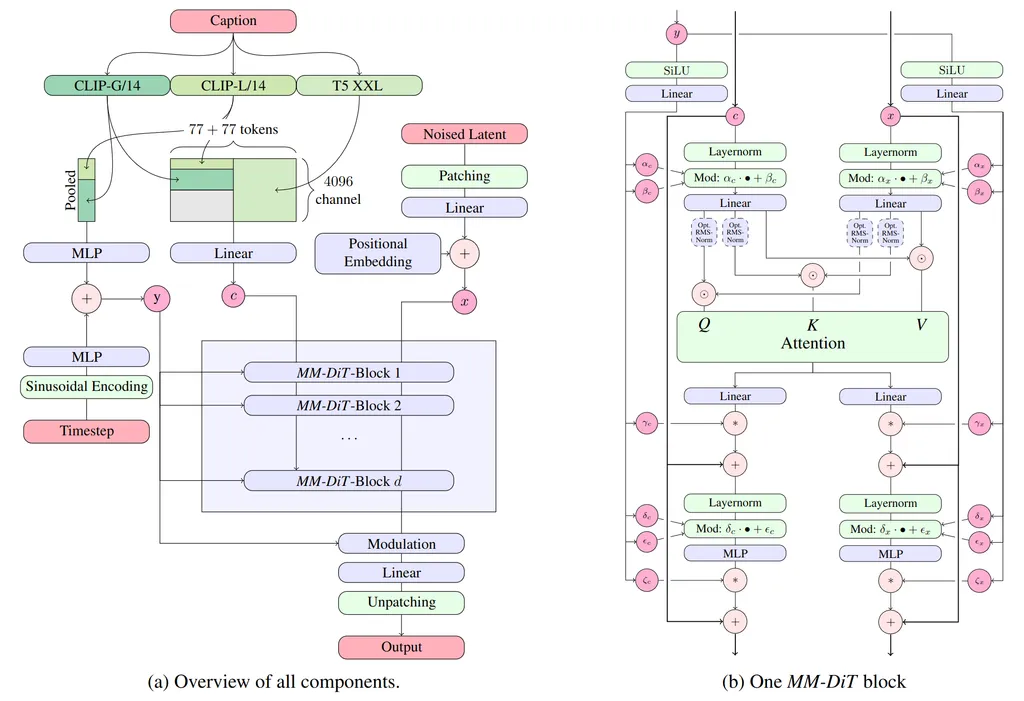

Stable Diffusion 3 Medium is a Multimodal Diffusion Transformer (MMDiT) text-to-image model that features greatly improved performance in image quality, typography, complex prompt understanding, and resource-efficiency.

For more technical details, please refer to the Research paper.

Please note: this model is released under the Stability Non-Commercial Research Community License. For a Creator License or an Enterprise License visit Stability.ai or contact us for commercial licensing details.

Model Description

- Developed by: Stability AI

- Model type: MMDiT text-to-image generative model

- Model Description: This model generates images from text prompts. It is a Multimodal Diffusion Transformer (https://arxiv.org/abs/2403.03206) that uses three fixed, pretrained text encoders (OpenCLIP-ViT/G, CLIP-ViT/L and T5-xxl).

License

- Non-commercial Use: Stable Diffusion 3 Medium is released under the Stability AI Non-Commercial Research Community License. The model is free to use for non-commercial purposes such as academic research.

- Commercial Use: This model is not available for commercial use without a separate commercial license from Stability. We encourage professional artists, designers, and creators to use our Creator License. Please visit https://stability.ai/license to learn more.

Model Sources

For local or self-hosted use, we recommend ComfyUI for inference.

Stable Diffusion 3 Medium is available on our Stability API Platform.

Stable Diffusion 3 models and workflows are available on Stable Assistant and on Discord via Stable Artisan.

- ComfyUI: https://github.com/comfyanonymous/ComfyUI

- StableSwarmUI: https://github.com/Stability-AI/StableSwarmUI

- Tech report: https://stability.ai/news/stable-diffusion-3-research-paper

- Demo: https://huggingface.co/spaces/stabilityai/stable-diffusion-3-medium

- Diffusers support: https://huggingface.co/stabilityai/stable-diffusion-3-medium-diffusers
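For readers taking the Diffusers route listed above, a minimal sketch might look like the following. It assumes the diffusers and torch packages are installed, that a CUDA GPU is available, and that access to the model repository has been granted on Hugging Face. The function name, step count, and guidance scale are illustrative assumptions, not settings taken from this page.

```python
# Repository named in the "Diffusers support" link above.
MODEL_ID = "stabilityai/stable-diffusion-3-medium-diffusers"


def generate(prompt, steps=28, guidance_scale=7.0, device="cuda"):
    """Generate one image with SD3 Medium through Diffusers (illustrative sketch)."""
    # Imported lazily so this module loads even without the heavy dependencies.
    import torch
    from diffusers import StableDiffusion3Pipeline

    # Load the pipeline in half precision to reduce memory use.
    pipe = StableDiffusion3Pipeline.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    pipe = pipe.to(device)

    # steps and guidance_scale are assumed defaults; tune them for your use case.
    result = pipe(
        prompt,
        negative_prompt="",
        num_inference_steps=steps,
        guidance_scale=guidance_scale,
    )
    return result.images[0]
```

The returned object is a PIL image, so it can be written to disk with its save method.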

Training Dataset

We used synthetic data and filtered publicly available data to train our models. The model was pre-trained on 1 billion images. The fine-tuning data includes 30M high-quality aesthetic images focused on specific visual content and style, as well as 3M preference data images.

File Structure

├── comfy_example_workflows/

│ ├── sd3_medium_example_workflow_basic.json

│ ├── sd3_medium_example_workflow_multi_prompt.json

│ └── sd3_medium_example_workflow_upscaling.json

│

├── text_encoders/

│ ├── README.md

│ ├── clip_g.safetensors

│ ├── clip_l.safetensors

│ ├── t5xxl_fp16.safetensors

│ └── t5xxl_fp8_e4m3fn.safetensors

│

├── LICENSE

├── sd3_medium.safetensors

├── sd3_medium_incl_clips.safetensors

├── sd3_medium_incl_clips_t5xxlfp8.safetensors

└── sd3_medium_incl_clips_t5xxlfp16.safetensors

We have prepared three packaging variants of the SD3 Medium model, each equipped with the same set of MMDiT & VAE weights, for user convenience.

- sd3_medium.safetensors includes the MMDiT and VAE weights but does not include any text encoders.

- sd3_medium_incl_clips_t5xxlfp16.safetensors contains all necessary weights, including the fp16 version of the T5XXL text encoder.

- sd3_medium_incl_clips_t5xxlfp8.safetensors contains all necessary weights, including the fp8 version of the T5XXL text encoder, offering a balance between quality and resource requirements.

- sd3_medium_incl_clips.safetensors includes all necessary weights except for the T5XXL text encoder. It requires minimal resources, but the model's performance will differ without the T5XXL text encoder.

- The text_encoders folder contains three text encoders and their original model card links for user convenience. All components within the text_encoders folder (and their equivalents embedded in other packagings) are subject to their respective original licenses.

- The comfy_example_workflows folder contains example ComfyUI workflows.
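The choice between the checkpoint files described above can be sketched as a small helper. The function name and its parameters are hypothetical and exist only for illustration; the file names are the ones listed in the file structure.

```python
def pick_checkpoint(bundle_text_encoders: bool, use_t5: bool = True,
                    t5_precision: str = "fp16") -> str:
    """Return the SD3 Medium file name matching the requested packaging.

    Hypothetical helper: it only maps options to the file names listed above.
    """
    if not bundle_text_encoders:
        # MMDiT + VAE only; text encoders must be loaded from text_encoders/.
        return "sd3_medium.safetensors"
    if not use_t5:
        # Both CLIP encoders bundled, no T5XXL: lowest resource requirements.
        return "sd3_medium_incl_clips.safetensors"
    if t5_precision == "fp16":
        return "sd3_medium_incl_clips_t5xxlfp16.safetensors"
    if t5_precision == "fp8":
        # fp8 T5XXL: a balance between quality and resource requirements.
        return "sd3_medium_incl_clips_t5xxlfp8.safetensors"
    raise ValueError(f"unsupported T5 precision: {t5_precision!r}")
```

For example, pick_checkpoint(True, use_t5=False) selects the CLIP-only bundle, which is the lightest option that still ships text encoders.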

For more details, see Stability AI's Hugging Face model page: https://huggingface.co/stabilityai/stable-diffusion-3-medium


Versions (2)

- latest (2 years ago)

- v20240612-232920

Node Details

Primitive Nodes (5)

EmptySD3LatentImage (1)

ModelSamplingSD3 (1)

Note (1)

PrimitiveNode (1)

TripleCLIPLoader (1)

Custom Nodes (10)

ComfyUI

- CLIPTextEncode (2)

- ConditioningSetTimestepRange (2)

- ConditioningZeroOut (1)

- ConditioningCombine (1)

- PreviewImage (1)

- VAEDecode (1)

- KSampler (1)

- CheckpointLoaderSimple (1)
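Node counts like the ones above can be reproduced from a workflow file. The sketch below assumes the JSON was exported from the ComfyUI interface, where nodes sit in a top-level "nodes" list and each node carries a "type" field; the helper names and the tiny sample dictionary are made up for illustration.

```python
import json
from collections import Counter


def load_workflow(path: str) -> dict:
    """Read a workflow JSON file from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def count_node_types(workflow: dict) -> Counter:
    """Count nodes by type, assuming the UI export layout described above."""
    return Counter(node["type"] for node in workflow.get("nodes", []))


# Hand-written stand-in for a real workflow file (not the actual SD3 workflow).
sample = {
    "nodes": [
        {"id": 1, "type": "CLIPTextEncode"},
        {"id": 2, "type": "CLIPTextEncode"},
        {"id": 3, "type": "KSampler"},
    ]
}
counts = count_node_types(sample)
print(counts["CLIPTextEncode"], counts["KSampler"])  # prints: 2 1
```

To inspect a real file, pass the result of load_workflow("sd3_medium_example_workflow_basic.json") to count_node_types.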

Model Details

Checkpoints (1)

sdv3/sd3_medium.safetensors

LoRAs (0)
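The checkpoint path listed under Model Details is relative, so the workflow will only load if the file sits in a matching subfolder. The following sketch checks for it, assuming a standard ComfyUI layout where checkpoints live under models/checkpoints; the function names and the root directory argument are hypothetical.

```python
from pathlib import Path

# Relative checkpoint path as referenced by this workflow.
CHECKPOINT = "sdv3/sd3_medium.safetensors"


def checkpoint_path(comfyui_root: str) -> Path:
    """Build the expected location, assuming the models/checkpoints layout."""
    return Path(comfyui_root) / "models" / "checkpoints" / CHECKPOINT


def is_installed(comfyui_root: str) -> bool:
    """Return True if the checkpoint file exists at the expected location."""
    return checkpoint_path(comfyui_root).is_file()
```

If is_installed returns False, either move the file into an sdv3 subfolder or select the checkpoint again in the CheckpointLoaderSimple node.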