"FLUX STYLE TRANSFER (better version)" for 8GB VRAM

Description

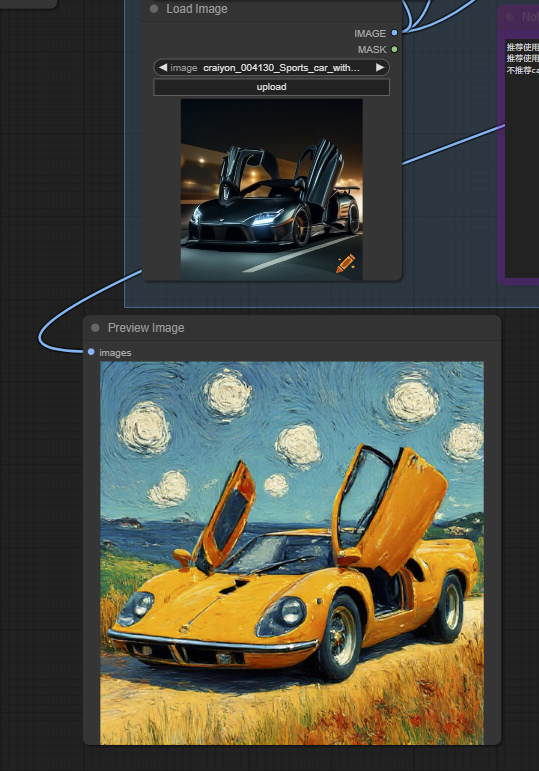

This is an optimized version of this workflow:

https://openart.ai/workflows/aigc101/flux-style-transfer-better-version/lJw1nuyXNaGckheGnvqF

For more general details, please refer to the original.

So what are the differences?

- I installed ComfyUI-GGUF so that GGUF models can be used

- I swapped in different (quantized GGUF) models

- Profit

It's nothing special, but to make them easier to find, here are the models that are used now (original -> replacement):

t5xxl_fp8_e4m3fn.safetensors -> t5-v1_1-xxl-encoder-Q3_K_S.gguf

https://huggingface.co/city96/t5-v1_1-xxl-encoder-gguf/tree/main

flux1-dev-fp8.safetensors -> flux1-dev-Q2_K.gguf

https://huggingface.co/city96/FLUX.1-dev-gguf/tree/main
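If you prefer to fetch the two files from a script, the following is a minimal sketch using `hf_hub_download` from the `huggingface_hub` package. It assumes `huggingface_hub` is installed and that ComfyUI-GGUF picks up the diffusion model from `ComfyUI/models/unet` and the T5 encoder from `ComfyUI/models/clip`; check the ComfyUI-GGUF README if your install uses different folders. The helper names `target_dir` and `download_all` are hypothetical.

```python
# Hypothetical helper for fetching the two GGUF files listed above.
# ASSUMPTIONS: `pip install huggingface_hub` has been run, and ComfyUI-GGUF
# loads the Flux model from models/unet and the T5 encoder from models/clip.
from pathlib import Path

# (Hugging Face repo id, file name, ComfyUI models subfolder)
MODELS = [
    ("city96/FLUX.1-dev-gguf", "flux1-dev-Q2_K.gguf", "unet"),
    ("city96/t5-v1_1-xxl-encoder-gguf", "t5-v1_1-xxl-encoder-Q3_K_S.gguf", "clip"),
]


def target_dir(comfy_root: str, subfolder: str) -> Path:
    """Folder inside the ComfyUI install where a model file should go."""
    return Path(comfy_root) / "models" / subfolder


def download_all(comfy_root: str) -> list[Path]:
    """Download every entry in MODELS into its ComfyUI folder; return the paths."""
    # Imported lazily so MODELS and target_dir can be inspected offline.
    from huggingface_hub import hf_hub_download

    paths = []
    for repo_id, filename, subfolder in MODELS:
        dest = target_dir(comfy_root, subfolder)
        dest.mkdir(parents=True, exist_ok=True)
        local_path = hf_hub_download(repo_id=repo_id, filename=filename, local_dir=dest)
        paths.append(Path(local_path))
    return paths
```

Calling `download_all("path/to/ComfyUI")` downloads both files (several GB in total). The other files the workflow needs, such as the VAE and the ControlNet models, are not covered by this sketch.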

On my GeForce GTX 1070 (8GB VRAM), it takes 5 minutes to generate a picture when all resolution parameters are set to 512x512, which is how they are set when you first open the workflow.
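To get a feel for why the quantized files matter on an 8GB card, here is a back-of-the-envelope sketch of the weight sizes before and after the swap. The parameter counts (about 12B for the FLUX.1-dev transformer, about 4.9B for the T5 XXL encoder) and the bits-per-weight values are assumptions; real GGUF files keep some tensors at higher precision, and activations, the VAE, CLIP-L and the ControlNets are ignored entirely.

```python
# Rough, hypothetical estimate of the memory needed for the model WEIGHTS only.
# All parameter counts and bits-per-weight values are ASSUMED approximations.

GIB = 1024 ** 3

# Assumed approximate parameter counts.
PARAMS = {
    "flux1-dev": 12e9,             # FLUX.1-dev transformer, ~12B parameters
    "t5-v1_1-xxl-encoder": 4.9e9,  # T5 v1.1 XXL encoder only, ~4.9B (assumption)
}

# Nominal bits per weight. The Q2_K / Q3_K values are the ggml k-quant block
# sizes (84 and 110 bytes per 256 weights); actual GGUF files are somewhat larger.
BITS_PER_WEIGHT = {
    "fp8": 8.0,
    "Q3_K_S": 3.4375,
    "Q2_K": 2.625,
}

# (model, original format, replacement format), as listed in the text.
SWAPS = [
    ("flux1-dev", "fp8", "Q2_K"),
    ("t5-v1_1-xxl-encoder", "fp8", "Q3_K_S"),
]


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in GiB."""
    return params * bits_per_weight / 8 / GIB


def report(vram_gib: float = 8.0) -> None:
    """Print the estimated size of each model before and after the swap."""
    for model, old, new in SWAPS:
        before = weight_gib(PARAMS[model], BITS_PER_WEIGHT[old])
        after = weight_gib(PARAMS[model], BITS_PER_WEIGHT[new])
        fits = "fits" if after < vram_gib else "does not fit"
        print(f"{model}: {old} ~{before:.1f} GiB -> {new} ~{after:.1f} GiB "
              f"({fits} in {vram_gib} GiB)")


report()
```

Under these assumptions the fp8 Flux weights alone are larger than 8 GiB, while the Q2_K version leaves some headroom for everything else. Treat the numbers as ballpark figures, not measurements.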

When generating at 1024x1024 for the first time, while the ControlNet image also still has to be generated, the workflow will crash once it reaches the XLabs Sampler, because loading the Flux model overflows the VRAM.

In that case, just run it again: the ControlNet image will already have been generated, so it should not overflow this time (at least on my machine; sadly, Docker won't help us here). It takes me 30-120 minutes to produce a 1024x1024 image, so I think you can imagine why I was not able to test on other GPUs.
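The jump in generation time from 512x512 to 1024x1024 is larger than the 4x increase in pixels might suggest. A rough scaling sketch, assuming Flux's layout of an 8x VAE downsampling followed by 2x2 latent patches (one image token per 16x16 pixel block); text tokens and the ControlNets are ignored, and `image_tokens` and `relative_cost` are hypothetical helper names:

```python
# Hypothetical sketch of how the transformer's workload grows with resolution.
# ASSUMES one image token per 16x16 pixel block (8x VAE downsampling * 2x2 patches).

PIXELS_PER_TOKEN_SIDE = 16  # 8 (VAE) * 2 (patch size), assumed


def image_tokens(width: int, height: int) -> int:
    """Number of image tokens the transformer processes for one image."""
    return (width // PIXELS_PER_TOKEN_SIDE) * (height // PIXELS_PER_TOKEN_SIDE)


def relative_cost(small: tuple[int, int], large: tuple[int, int]) -> tuple[float, float]:
    """Return (per-token layer cost ratio, self-attention cost ratio) from small to large."""
    ratio = image_tokens(*large) / image_tokens(*small)
    # Per-token layers (MLPs, projections) scale linearly with the token count,
    # self-attention scales roughly quadratically.
    return ratio, ratio ** 2


for size in [(512, 512), (1024, 1024)]:
    print(size, image_tokens(*size), "image tokens")

linear, attention = relative_cost((512, 512), (1024, 1024))
print(f"per-token layers: x{linear:.0f}, attention: x{attention:.0f}")
```

Under these assumptions, 1024x1024 has 4x the image tokens of 512x512 and roughly 16x the attention work; any offloading once VRAM runs out adds to that. This is a scaling argument, not a benchmark.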


Versions (1)

- latest (10 months ago)

Node Details

Primitive Nodes (12)

- ApplyFluxControlNet (3)

- CLIPTextEncodeFlux (2)

- DualCLIPLoaderGGUF (1)

- LoadFluxControlNet (3)

- Note (1)

- UnetLoaderGGUF (1)

- XlabsSampler (1)

Custom Nodes (11)

ComfyUI

- VAEDecode (1)

- PreviewImage (4)

- LoadImage (1)

- VAELoader (1)

- EmptyLatentImage (1)

- CannyEdgePreprocessor (1)

- HEDPreprocessor (1)

- DepthAnythingPreprocessor (1)

Model Details

Checkpoints (0)

LoRAs (0)