Background Replacer for Products & Portraits, Lighting Adjustment & Detail Preservation

Description

Important: All four versions (V1, V2, V3, and V4) can be downloaded using the buttons on the right. From top to bottom, they are V4, V3, V2, and V1.

This ComfyUI workflow replaces the background of an image and helps the subject blend seamlessly into the new environment. It handles portraits, products, and complex compositions, combining SDXL and Flux models to deliver strong results with minimal effort. It suits creators who want high-quality background replacement without the performance cost of traditional methods.

New Features Compared to Previous Versions

- Faster, More Efficient: The new version uses a Lightning variant of SDXL. This significantly reduces VRAM usage (only 6GB of VRAM is now required) while retaining most of the detail. Because it needs just 10 sampling steps, the relighting stage runs faster with little loss in quality.

- Enhanced Background Removal: The new workflow uses the BiRefNet and RMBG-2.0 models, which remove backgrounds more accurately and efficiently, especially around fine details such as hair and intricate subject edges. You can also toggle the “process_detail” setting to refine the result for complex subjects.

- Realistic Background Generation: Background generation now uses a fine-tuned Flux-based checkpoint. It produces more realistic and detailed backgrounds and smoother transitions between the subject and the new environment.

- Greater Masking Control: The updated version adds more customization options for masking and painting. You can edit masks in real time with the “Mask Editor” and paint over areas to refine details such as hair and subject edges.
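Refining edges like hair is commonly done in two steps. First the subject mask is grown (dilated). Then its edge is blurred so the subject fades into the new background instead of ending at a hard cutoff. The workflow's node list includes `LayerMask: MaskGrow` and `MaskBlur+`, which appear to serve this purpose. The pure-Python sketch below is only a generic illustration of grow-then-feather, not those nodes' actual code. It assumes a mask stored as a 2D list of floats in [0, 1]. The helper names `grow_mask` and `feather_mask` are hypothetical.

```python
from typing import List

Mask = List[List[float]]  # assumption: rows of floats in [0, 1], 1.0 = subject


def _window(mask: Mask, y: int, x: int, radius: int) -> List[float]:
    """Collect the in-bounds values in the (2r+1) x (2r+1) square around (y, x)."""
    h, w = len(mask), len(mask[0])
    return [
        mask[yy][xx]
        for yy in range(max(0, y - radius), min(h, y + radius + 1))
        for xx in range(max(0, x - radius), min(w, x + radius + 1))
    ]


def grow_mask(mask: Mask, radius: int = 1) -> Mask:
    """Dilate the mask: each pixel becomes the maximum of its neighbourhood."""
    return [[max(_window(mask, y, x, radius)) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def feather_mask(mask: Mask, radius: int = 1) -> Mask:
    """Box-blur the mask so hard 0/1 edges turn into a soft ramp."""
    out: Mask = []
    for y in range(len(mask)):
        row = []
        for x in range(len(mask[0])):
            vals = _window(mask, y, x, radius)
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


if __name__ == "__main__":
    # A one-pixel "subject" in the middle of a 5x5 mask.
    m = [[0.0] * 5 for _ in range(5)]
    m[2][2] = 1.0
    grown = grow_mask(m, radius=1)       # becomes a 3x3 block of 1.0
    soft = feather_mask(grown, radius=1)
    for r in soft:
        print(" ".join(f"{v:.2f}" for v in r))
```

A box blur keeps the example short. Real mask-blur nodes more likely use a Gaussian kernel, but the effect on the edge is similar: values between 0 and 1 that let the compositing step mix subject and background.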

Video Tutorial and Model Installation

-----------------------------------------------------------

Key Differences Between ‘Flux Background Replacer V2’ and ‘Flux Background Replacer V3’:

- Node Count and Complexity:
  - Flux Background Replacer V2: A highly complex workflow with over 108 nodes. It suits scenarios where precise image manipulation and blending are essential.
  - Flux Background Replacer V3: A more streamlined design with 80 nodes. It offers a simpler, more efficient workflow without compromising quality.

- ControlNet Model and Edge Handling:
  - Flux Background Replacer V2: Uses the ControlNet Canny model from Xlabs, which conditions generation on detected edges to preserve the subject's outlines. Combined with the relighting function, this allows photorealistic integration of subjects into the new background.
  - Flux Background Replacer V3: Uses the ControlNet Depth model and the Upscaler model from Jasper AI, which control edges and outlines without additional specialized nodes. As a result, V3 works with the standard ‘Apply ControlNet’ node, which saves time and simplifies setup.

For a detailed walkthrough, watch the YouTube video tutorial:

Bilibili homepage: https://space.bilibili.com/3546611913329493

-------------------------------------------------------------------------------------------------------------------

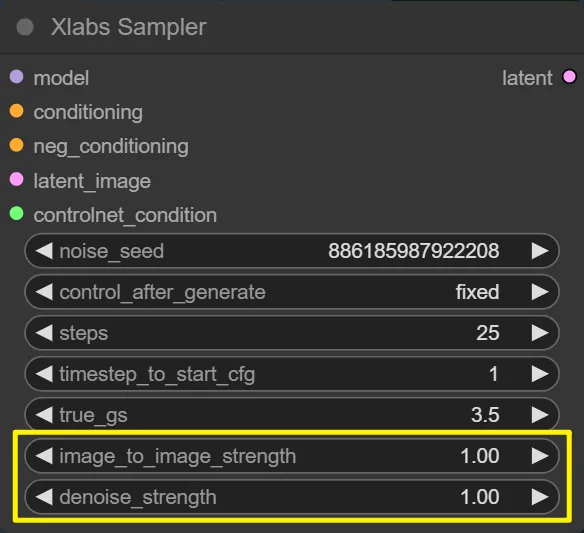

V2 Update: Fixed a bug caused by changes to the Xlabs Sampler. The updated Xlabs Sampler has a new parameter, “Denoising Strength”. Don't forget to set “image_to_image_strength” to 1.

------------------------------------------------------------------------------------------------------------------

Welcome to a ComfyUI workflow that simplifies changing photo backgrounds for products, people, and other objects. It uses Flux models to create seamless background replacements, so you can make creative and professional edits in just a few steps.

Ideal for portrait photographers, product photographers, digital artists, and content creators, this workflow enhances images while preserving essential details, offering versatility and precision.

Functionality

The ComfyUI workflow specializes in changing backgrounds without compromising the integrity of the subject. It operates through five distinct node groups, each dedicated to a specific task:

- Background Removal and Subject Placement: Isolates the subject and positions it on a gray reference background.

- Background Generation: Utilizes Flux models to create a new background that fills the area behind the subject.

- Relighting: Adjusts lighting and shadows on the subject to match the new background for a cohesive look.

- Repainting: Refines the subject’s appearance by restoring lost details and enhancing image quality.

- Detail Restoration: Ensures all fine details are crisp and natural, bringing out the best in both the subject and the background.

This workflow not only changes backgrounds but also manages lighting, shadows, and details, making it a comprehensive tool for creating high-quality images.
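The first node group places the isolated subject on a gray reference background. At its core, that step is per-pixel alpha compositing with the subject mask: `out = mask * subject + (1 - mask) * background`. This is the same kind of operation a masked-composite node such as `ImageCompositeMasked` performs. The sketch below is a generic pure-Python illustration, not the workflow's own code. It represents RGB pixels as float tuples in [0, 1] and uses a mid-gray value of 0.5. Both of those choices are assumptions rather than values taken from the workflow, and `composite_on_gray` is a hypothetical name.

```python
from typing import List, Tuple

Pixel = Tuple[float, ...]          # assumption: (r, g, b) floats in [0, 1]
Image = List[List[Pixel]]
Mask = List[List[float]]           # 1.0 = subject, 0.0 = background


def composite_on_gray(subject: Image, mask: Mask, gray: float = 0.5) -> Image:
    """Blend every pixel as mask * subject + (1 - mask) * gray.

    A mask value of 1.0 keeps the subject, 0.0 shows the gray background,
    and fractional values (for example a feathered edge) mix the two.
    The default gray level of 0.5 is an illustrative assumption.
    """
    if len(subject) != len(mask) or any(
        len(img_row) != len(mask_row) for img_row, mask_row in zip(subject, mask)
    ):
        raise ValueError("subject and mask must have the same dimensions")
    bg = (gray, gray, gray)
    out: Image = []
    for img_row, mask_row in zip(subject, mask):
        out.append([
            tuple(a * px + (1.0 - a) * b for px, b in zip(pixel, bg))
            for pixel, a in zip(img_row, mask_row)
        ])
    return out


if __name__ == "__main__":
    red = (1.0, 0.0, 0.0)
    # Fully inside the subject, on a half-transparent edge, fully outside.
    print(composite_on_gray([[red, red, red]], [[1.0, 0.5, 0.0]]))
    # [[(1.0, 0.0, 0.0), (0.75, 0.25, 0.25), (0.5, 0.5, 0.5)]]
```

The middle pixel shows why a soft mask matters. Edge pixels become a mix of subject and background rather than a hard cutout, which later relighting and repainting stages can then refine.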

Here are some examples:

Watch the tutorial to see these capabilities in action and follow along to master this powerful workflow.

YouTube: https://youtu.be/dkkyrv53Rp8

By following these steps and utilizing the resources provided, you can create stunning images with new backgrounds effortlessly. Happy editing!

Versions (4)

- latest (a year ago)

- v20241007-031401

- v20240906-013051

- v20240902-083659

Node Details

Primitive Nodes (56)

- Anything Everywhere (4)

- Anything Everywhere3 (1)

- DF_Image_scale_by_ratio (1)

- Fast Groups Bypasser (rgthree) (7)

- GetNode (9)

- Image Comparer (rgthree) (6)

- JWIntegerMin (1)

- LayerColor: AutoAdjustV2 (1)

- LayerMask: BiRefNetUltraV2 (2)

- LayerMask: LoadBiRefNetModelV2 (1)

- Note (1)

- Prompts Everywhere (1)

- Reroute (13)

- SetNode (5)

- SetUnionControlNetType (1)

- SimpleCondition+ (1)

- easy isMaskEmpty (1)

Custom Nodes (50)

- CR Image Input Switch (1)

ComfyUI

- CheckpointLoaderSimple (1)

- InvertMask (1)

- PreviewImage (6)

- VAEDecode (2)

- DifferentialDiffusion (1)

- ControlNetApplyAdvanced (2)

- ImageCompositeMasked (3)

- InpaintModelConditioning (1)

- ImageBlur (1)

- KSampler (2)

- LoadImage (1)

- EmptyLatentImage (1)

- CLIPTextEncode (2)

- ControlNetLoader (1)

- easy imageSize (3)

- easy imageDetailTransfer (2)

- MaskPreview+ (2)

- SDXLEmptyLatentSizePicker+ (1)

- MaskBlur+ (1)

- PreviewBridge (1)

- LamaRemover (1)

- LayerMask: MaskGrow (4)

- LayerUtility: ImageScaleByAspectRatio V2 (1)

- LayerUtility: ImageBlendAdvance V2 (1)

- LayerColor: Exposure (1)

- LayerUtility: ColorImage V2 (1)

- CannyEdgePreprocessor (1)

- FloatSlider (3)

- ImageAndMaskPreview (1)

Model Details

Checkpoints (1)

realvisxlV50_v50LightningBakedvae.safetensors

LoRAs (0)